CUTTER IT JOURNAL VOL. 29, NO. 7

It is well known that social engineering attacks are designed to target the user-computer interface, rather than exploiting a system’s technical vulnerability, to enable attackers to deceive a user into performing an action that will breach a system’s information security. They are a pervasive and existential threat to computer systems, because in any system, the user-computer interface is always vulnerable to abuse by authorized users, with or without their knowledge.

Historically, social engineering exploitations in computer systems were limited to traditional Internet communications such as email and website platforms. However, in the Internet of Things (IoT), the threat landscape includes vehicles, industrial control systems, and even smart home appliances. Add to this mix naive users and default passwords that are extremely weak and easily guessed, and the threat becomes greater. As a result, the effects of a deception-based attack will now no longer be limited to cyberspace (e.g., stealing information, compromising a system, crashing a Web service), but can also result in physical impacts, including:

- Damage to manufacturing plants

- Disruption of train and tram signaling, causing death and injury

- Discharge of sewage from water treatment plants

- Damage to nuclear power plants (e.g., Stuxnet)

In December 2014, a German steel mill furnace sustained damage when hackers used targeted phishing emails to capture user credentials, thereby gaining access to the back office and ultimately the production network, with devastating consequences. Another example occurred when households in Ukraine suffered a blackout on 23 December 2015 caused by an attack that brought down the power grid. Again, the attackers used phishing emails to trick users at the electric company into clicking on an attachment in an email, ostensibly from the prime minister of Ukraine. This is thought to be the first cyberattack that brought down an entire power grid, leaving 225,000 homes without electricity.

The more effective such cyber-physical attacks prove,¹ the more the deception attack surface continues to grow. For example, in the near future, fake tire pressure alerts shown on a car’s dashboard or gas leakage warnings on a smart heating system’s GUI may be used to achieve deception in a manner not too dissimilar to current scareware pop-up alerts experienced by today’s mobile and desktop users. In the extreme, attackers may even begin to target medical devices (e.g., pacemakers or mechanical insulin-delivering syringes) via near-field communications or wireless sensor networks, in an approach analogous to ransomware. This has already occurred through the IoT using conventional hacking techniques (i.e., SSH vulnerabilities and unpatched systems with default hardwired passwords) and is commonly known as a MEDIJACK attack. The major problem with these devices is that they remain unpatched throughout their lifetime, and at the moment this is also the situation within the IoT. Figure 1 provides a snapshot of the potential IoT social engineering threat space.

Would Your Fridge Lie to You?

Prior to the advent of the IoT, an email or instant message purporting to originate from your fridge would seem ludicrous. Nowadays, however, the concept does not seem so absurd. In fact, it is exactly this change in our expectations about the way we use technology and the increasing capabilities of system-to-system communication that poses the most risk. Today’s users expect greater visibility and control over their environment, leading to a proliferation of distributed interfaces attached to what were traditionally isolated systems, sharing new types of data across a cyber-physical boundary. The result is an ever-richer user experience, but also an augmented attack surface at the disposal of willing cybercriminals. And as cybercriminals tend to go in search of low-hanging fruit in order to exploit a system, the user is now more than ever a soft target.

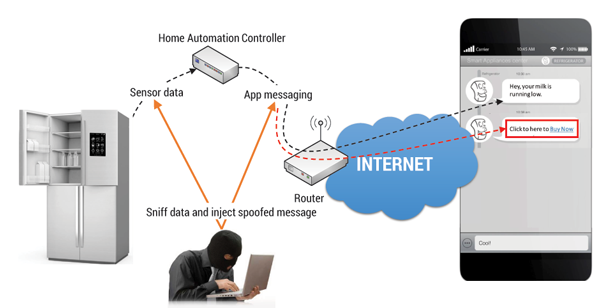

Since attackers may not always have physical access to IoT devices to exploit them directly, they can instead target the distributed functionality and associated behavior integrated into new and existing systems. For example, it would not be unreasonable to imagine an attacker crafting a spoofed instant message from a user’s refrigerator (see Figure 2), reporting that it is running low on milk and asking whether the user would like to place an order with an Amazon-style “one-click” ordering button — which conveniently leads to a drive-by download. But how did the attacker know the user’s milk was low? Well, in the IoT they simply sniffed seemingly unimportant, unencrypted sensor node data sent from the fridge to the home automation controller, which connects to the user over the Internet via their home broadband router. Here, the attacker has exploited platform functionality that interfaces with the IoT device (in this case, a fridge) by manipulating the perceived behavior of the system as opposed to the device itself. In practice, such an attack can lead to a conventional exploitation such as system compromise or theft of banking credentials. It is not a great leap to envision that your fridge could be held to ransom by ransomware. Pay up, or your fridge won’t turn on.

Phishing emails claiming to originate from financial institutions and banks have existed for nearly 30 years, and users have learned to be wary of them. By contrast, users are not yet sensitive to malicious behavior originating from home/city automation systems, smart devices, or social media platforms that provide access to e-health, emergency, or public services. To a large extent, this is because the physical appearance of such systems does not require significant change to become compatible with the Internet of Things; normally it is only the data these platforms generate that is shared. Specifically, the IoT is enhancing data accessibility, which is further augmenting the attack landscape for cybercriminals seeking to develop convincing social engineering attacks.

Data Leakage: No Data Is Too Big or Small

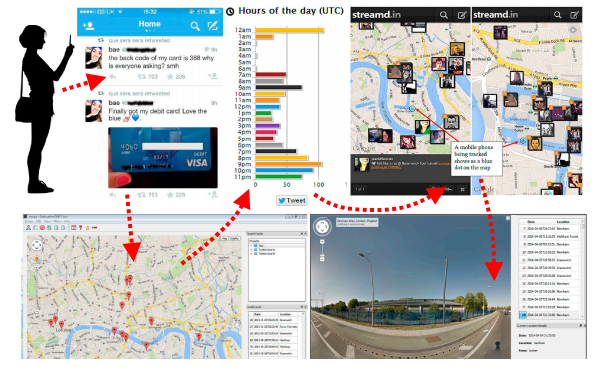

Just as the IoT expands the types of user interfaces that attackers can target, it also increases the types of data (previously hidden from attackers) that can be acquired. It is well known that attackers are adept at gathering user data and using it to target a user, either to design an attack specific to the user’s system or to improve the credibility of the deception techniques used. Nowadays, hackers use social networks to obtain personal data about a user, such as their children’s names, pet’s name, date of birth, where they graduated, and so on. By detecting and exploiting systems that are of high value and using their target’s “pattern of life” data, cybercriminals can develop effective deception mechanisms by manipulating information the user has shared and is therefore very familiar with and unlikely to repudiate. Data leakage is exacerbated when geolocation is turned on in IoT devices (see Figure 3). This enables anyone to determine the exact location where a smartphone picture was taken, for example, which can be a problem if this identifies the user’s home and they have just tweeted that they are going away on holiday. Burglars use Twitter as well!

Recent research by the C-SAFE team at the University of Greenwich has demonstrated the ease with which an individual can be profiled through their leaked personal data using only social networks (Facebook, Twitter, LinkedIn, Instagram, etc.). Researchers undertook a series of experiments to determine how much information they could extract about three subjects using only social networking sites. By utilizing three freely available tools (Twitonomy, Streamd.in, Cree.py) that harvest information from Twitter, the data revealed where the three subjects lived and worked, the route they took to work each day, where one subject’s parents lived, and even where and when another subject went to the gym. It was also possible to follow each of them through cyberspace to other sites such as Facebook, LinkedIn, Foursquare, and Instagram, where information missing from their “profile” was quickly filled in. The experiment demonstrated how easy it is for cybercriminals to gather personal data to construct social engineering attacks that an individual would find credible.

“Smart”er Attacks

Social engineering attacks against IoT devices are by no means hypothetical, and exploitations abusing functionality in smart devices have already been observed in the wild. For example, from December 2013 to January 2014, security provider Proofpoint detected a cyberattack that was originating from the IoT, where three times a day, in bursts of 100,000, malicious emails targeting businesses and individuals were sent out. In total, the global attack consisted of more than 750,000 malicious emails originating from over 100,000 everyday consumer gadgets, 25% of which originated from smart TVs, home routers, and even one fridge. Crucially, the attack demonstrated that botnets are now IoT botnets, capable of recruiting almost any device with a network connection and messaging software.

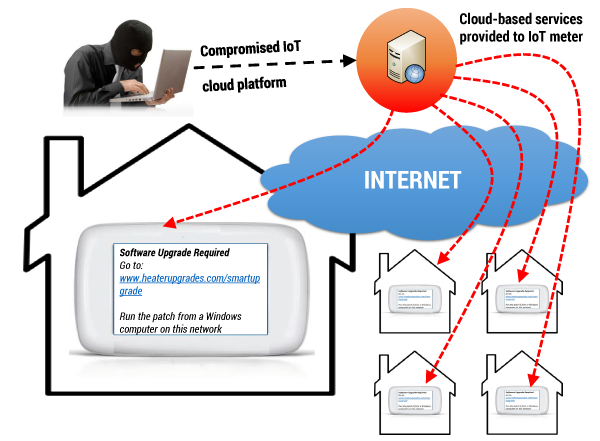

Attack Case A: IoT Phishing in Smart Homes

Smart homes are becoming more common as people connect up numerous devices and “things” within their home. All these IoT things and devices connect to a network, be it wireless or wired, and eventually connect to a routing device. Individually they may not offer any obvious value to cybercriminals, but they can provide a user interface that an attacker can manipulate to execute a social engineering attack. The following attack considers a threat actor who has gained control of the cloud-based services platform that one brand of IoT smart meter bundles with its product to deliver updates or new content. Here, the attacker can either monitor (what may be) unencrypted communication between the cloud services and the smart meter and inject information into existing data flows, or potentially send direct messages to the meters if they have gained complete control over the cloud environment. In both variants, the attacker pushes the following message to all smart meters when the heating sensor indicates that the users are home (e.g., the heating has been turned up/down): “Software Upgrade Required. Go to: www.heaterupgrades.com/smartupgrade. Run the patch from a Windows computer on this network.” (See Figure 4.) If the user complies, then they have been phished.

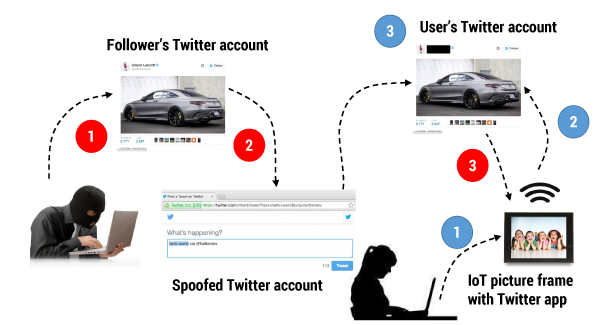

Attack Case B: The Internet of Social Things

Social networking and media are at the heart of the IoT, where it is no longer only people that share information with other people, but also things that are able to communicate with users or with other things. Think back to your fridge kindly advising that you are low on milk. Your car might even want to tell your Facebook friends that its carbon footprint is less than four other cars on the road this week (i.e., in-product advertising across social media). The following attack considers a threat actor scanning Twitter and looking for status posts that include metadata from IoT picture frames. IoT picture frames often come bundled with an app that allows their user to automatically download and upload pictures to popular social media platforms. In this example, the attacker finds a tweet containing the metadata; however, it is a retweet from an open Twitter account following a particular user who owns the target picture frame. Next, the attacker sends a direct tweet to the user (whose account privacy settings were locked down) from a spoofed Twitter account purporting to be the picture frame’s manufacturer. The tweet contains a shortened URL to a Twitter app that will allow the user to install video functionality on their picture frame for free. In reality, the Twitter app gives the attacker’s account rights to download all the pictures from the user’s IoT picture frame, which the attacker can then use as ransomware data or to craft future phishing attacks (see Figure 5).

Figure 5: … Social Things contagion to deliver a social engineering attack.

Defense Recommendations

In order to instill confidence in the smart technologies that underpin the IoT, encourage their uptake, and ensure that they will be usable in the long term, it is necessary for the security of these devices to be robust, scalable, and above all practical. Here, we explore four approaches to defending the IoT against social engineering attacks.

Generic Attack Classification

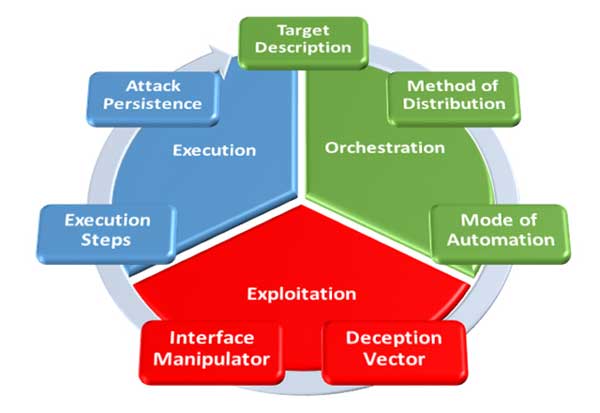

Since deception-based attacks in the IoT can be launched in either cyber or physical space, identifying the source of a deception attempt and the structure of a social engineering attack can be extremely difficult. For developers, the challenge of building an effective defense that addresses a range of deception vectors would appear insurmountable when we consider all the different platforms that may have been involved in an attack. It is more practical to employ generic classification criteria to break down attacks into parameterized, component parts. This approach can be used to reveal shared characteristics between attacks, which then aids the design of defenses that address multiple threats sharing similar traits. Using the taxonomy proposed elsewhere by Ryan and Guest Editor George Loukas, and summarized by each root category in Figure 6, the following recommendations can help developers capture the multiple variables involved in the construction, delivery, and execution of a social engineering attack by applying criteria that are independent of the attack vectors used.

Figure 6: … for social engineering attacks in the Internet of Things.

Orchestration

Target Description (TD). How is the target chosen? Determine an attack’s targeting parameters to define which user and/or system features a defense system should focus on. A targeted attack is likely to exploit a specific user’s attributes leaked by their IoT footprint (e.g., a toll payment spear phishing email based on tweets mapped to the geolocation of their vehicle) as part of the deception. In contrast, promiscuous targeting is opportunistic and random (e.g., an attacker plants a malicious QR code in a shopping center).

Method of Distribution (MD). How does the attack reach the target? Investigate the method by which the attack’s deception is distributed and where it is executed to identify the platforms that are involved in the attack. Determine whether it is a remote system (hence involving a network) or a local system that requires monitoring and defending.

Mode of Automation (MA). Is the attack automated? Recognizing whether an attack is automatically or manually executed will help determine the most suitable response mechanism and the type of data that can be meaningful to collect about it. It may be possible to fingerprint a fully automated attack based on patterns of previously observed behavior, while a fully manual attack may need to focus on the attacker’s behavior instead.

Exploitation

Deception Vector (DV). Is it looks or behavior that deceives the user? A defense mechanism needs to pinpoint mechanisms by which an attacker can deceive the user into a false expectation by manipulating visual and/or system behavior aspects of a system. Within the IoT, it is not just GUIs that can be abused, but the physical appearance or state of a sensor node in a home/work/city automation system as well (e.g., heating thermometer, heartbeat monitor, vehicle speed, traffic lights).

Interface Manipulator (IM). Is the platform used in the deception only (ab)used or also programmatically modified? Depending on the system involved in an attack, it may be impractical or impossible to patch directly (e.g., pacemaker, legacy actuator). In order to reduce the scope of a defense, developers need to establish whether the deception vector in an attack occurs in code (e.g., embedded within the system or external) or abuses intended user space functionality built into the platform by design.

Execution

Execution Steps (ES). Does the attack complete the deception in one step? Model the effect that a single user action can have on the integrity of a platform, as it may be necessary to build in extra user authentication steps to commit actions, especially in e-health services or industrial control systems. An attack that relies on multiple user response steps may be detected earlier and more easily than a single-step attack, and before it completes, by looking for traces of its initial steps.

Attack Persistence (AP). Does the deception persist? Persistent attempts can be modeled by a learning-based defense system to identify the deception’s pattern of behavior in order to block it. At the same time, a persistent attack may also have a higher chance of success against the target. One-off deception attempts are by definition more difficult to detect and may be missed if a defense is only looking for patterns in system behavior or if the pattern is as yet unknown (i.e., a zero-day vulnerability).
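The seven criteria above lend themselves to a small record type. The following is a minimal sketch, not the taxonomy authors' own notation: the class names, field names, and value labels (`"cosmetic"`, `"programmatic"`, and so on) are shorthand chosen for illustration. The example record uses the values this article assigns to Attack Case A in its classification section.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):          # TD: how is the target chosen?
    TARGETED = "targeted"
    PROMISCUOUS = "promiscuous"


class Distribution(Enum):    # MD: where is the deception executed?
    REMOTE = "remote"
    LOCAL = "local"


class Automation(Enum):      # MA: is the attack automated?
    AUTOMATED = "automated"
    MANUAL = "manual"


class Deception(Enum):       # DV: looks, behavior, or both
    COSMETIC = "cosmetic"
    BEHAVIORAL = "behavioral"


class Manipulation(Enum):    # IM: modified in code, or (ab)used as designed?
    PROGRAMMATIC = "programmatic"
    USER_INTERFACE = "user_interface"


class Steps(Enum):           # ES: one user action or several?
    SINGLE = "single"
    MULTIPLE = "multiple"


class Persistence(Enum):     # AP: does the deception persist?
    ONE_OFF = "one_off"
    PERSISTENT = "persistent"


@dataclass(frozen=True)
class AttackProfile:
    """One attack, described by the seven generic criteria (labels are illustrative)."""
    name: str
    td: Target
    md: Distribution
    ma: Automation
    dv: frozenset  # set of Deception values, since an attack may use both
    im: Manipulation
    es: Steps
    ap: Persistence

    def summary(self) -> str:
        dv = "+".join(sorted(d.value for d in self.dv))
        return (f"{self.name}: TD={self.td.value} MD={self.md.value} "
                f"MA={self.ma.value} DV={dv} IM={self.im.value} "
                f"ES={self.es.value} AP={self.ap.value}")


# Values taken from this article's own classification of Attack Case A.
case_a = AttackProfile(
    name="Case A (smart meter phishing)",
    td=Target.PROMISCUOUS,
    md=Distribution.LOCAL,
    ma=Automation.AUTOMATED,
    dv=frozenset({Deception.COSMETIC, Deception.BEHAVIORAL}),
    im=Manipulation.PROGRAMMATIC,
    es=Steps.MULTIPLE,
    ap=Persistence.ONE_OFF,
)
print(case_a.summary())
```

Keeping the deception vector as a set rather than a single value reflects that an attack can deceive through appearance and behavior at once.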

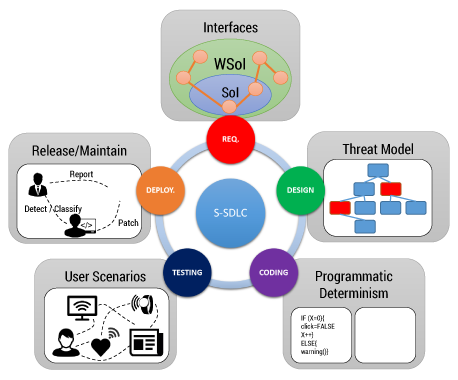

S-SDLC

It is important that IoT platform developers have a detailed understanding of how their system will interface with users, as well as how system functionality may affect the wider ecosystem in which the system may be deployed. The Secure Software Development Life Cycle (S-SDLC) provides developers with a guideline framework for the design and implementation of system software by integrating security considerations systematically into the core requirements and design of the software’s architecture. Within the S-SDLC framework (see Figure 7), in each lifecycle stage, the following key concepts can aid the development of IoT platforms and functionality that are resistant to deception-based attacks.

Figure 7: … developing resistance to deception-based attacks in the IoT.

Requirements

Identify the attack surface for an IoT platform by clearly defining the intended functionality and its expected limitations. Document the system-to-system and system-to-user interfaces forming the overall system of interest (SoI) and determine how these communicate and affect interfaces within the wider SoI (WSoI; e.g., the deployment environment).

Design

Develop threat models that run through different features of the platform’s design and WSoI interactions. Pinpoint weak spots in the user interface that can be abused or vulnerabilities in data transfer and network communications that may allow attackers to inject malicious data or code or gather information about the user.

Coding

Employ static code analysis to determine whether the platform’s programmatic features are deterministic to ensure spoofed or injected data does not force the platform to exhibit a deceptive behavior toward the user. Similarly, evaluate user interface controls (whether graphical or physical; e.g., a button) to ascertain whether these can be (ab)used through intended functionality.

Testing

Design and implement scenarios where different user behavior is arbitrarily executed (e.g., fuzzing) in order to identify anomalous situations when the user interface or functionality can become part of a deception-based attack. In testing, developers should generate and execute random input parameters, physical and logical, against the IoT platform in an attempt to elicit unhandled or anomalous behavior that may lead to exploitable vulnerabilities.
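As a rough illustration of this kind of testing, the sketch below fuzzes a display handler with random messages and records any input that makes it show a link or overlong text to the user. Everything here is hypothetical: `render_notice`, the `ALLOWED_NOTICES` codes, and the two invariants (no "http" on screen, at most 32 characters) are assumptions made for the example, not part of any real smart meter firmware.

```python
import random
import string

# Hypothetical whitelist of notices the device is allowed to display.
ALLOWED_NOTICES = {"LOW_BATTERY", "FILTER_DUE", "UPDATE_AVAILABLE"}


def render_notice(message):
    """Hypothetical display handler: show only known notice codes, never free text."""
    if not isinstance(message, dict):
        return "ERROR"
    code = message.get("code")
    if isinstance(code, str) and code in ALLOWED_NOTICES:
        return code.replace("_", " ").title()
    return "ERROR"


def random_value(rng):
    """Produce one arbitrary value of varying type and size."""
    choice = rng.randrange(5)
    if choice == 0:
        return rng.randint(-2**31, 2**31)
    if choice == 1:
        length = rng.randrange(64)
        return "".join(rng.choice(string.printable) for _ in range(length))
    if choice == 2:
        return None
    if choice == 3:
        return rng.choice(sorted(ALLOWED_NOTICES))
    # A phishing-style payload, similar in shape to the Case A message.
    host = "".join(rng.choice(string.ascii_lowercase) for _ in range(8))
    return "Go to: http://" + host


def fuzz(handler, runs=5000, seed=0):
    """Feed random messages to the handler; collect inputs that break the invariants."""
    rng = random.Random(seed)
    failures = []
    for _ in range(runs):
        message = {rng.choice(["code", "text", "url"]): random_value(rng)
                   for _ in range(rng.randrange(4))}
        try:
            shown = str(handler(message))
        except Exception as exc:  # unhandled behavior is itself a finding
            failures.append((message, repr(exc)))
            continue
        # Invariants (assumed): the display never shows a link or overlong text.
        if "http" in shown.lower() or len(shown) > 32:
            failures.append((message, shown))
    return failures


print(len(fuzz(render_notice)), "invariant violations found")
```

A handler that echoes free text, such as `lambda m: str(m.get("text", ""))`, would be flagged by the same harness, which is the kind of deceptive behavior this stage aims to surface before release.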

Release/Maintain

Establish monitoring or reporting functionality within the platform deployment environment to help detect attacks. This will facilitate continuous patching and security hardening of the specific platform and/or external platforms that have lower-security features.

Attack Classification and Defense

By applying each taxonomy criterion against each of the two IoT attack cases, we can use classification to employ S-SDLC principles that help suggest a single approach to defense that would prevent both attacks.

Case A

TD. Promiscuously targets any user who owns the smart meter, by flooding connected devices with messages and commands (e.g., malicious updates) via the cloud.

MD. Distributed to execute the deception via local software on the smart meter.

MA. Functions as an automated message sent from the cloud-based service.

DV. Deception is both cosmetic and behaviorally convincing, as the user would expect communications from the cloud platform.

IM. Injecting malicious messages through the cloud attacks the programmatic interface of the smart meter by adjusting the internal code to display a deceptive message.

ES. The user must exercise multiple steps in order for the deception to be successful. The first step downloads the supposed patch; the second step then requires the user to install the patch.

AP. The message’s particular deception is one-off, as it is unlikely the attacker will reissue the same phishing message and thus compromise the attack’s integrity.

Case B

TD. Promiscuously targets any user who owns an IoT picture frame with social media app functionality.

MD. Distributed to execute the deception via remote software on the Twitter platform.

MA. Functions as a manual operation by searching for tweets, then creates a custom Twitter account and tweets once a target is found.

DV. Deception is behaviorally convincing, as product suppliers often communicate with customers via social media so as to gain customer data analytics. It is unlikely the Twitter account is visually credible (e.g., there are few or no followers, and as the account is not official, tweets are not authenticated — no blue tick!).

IM. Here the attack simply (ab)uses the user interface functionality of the Twitter platform.

ES. The deception completes in multiple steps, as the user must click on the URL and then add the malicious Twitter app permissions to their account.

AP. The message’s particular deception is one-off as it is unlikely the attacker will reissue the same phishing message and thus compromise the attack’s integrity.

By applying the taxonomy classification to each attack case, we can establish that a number of similar traits are shared in the orchestration, exploitation, and execution phases of these attacks. First, both attacks target users promiscuously, so it would appear the attacker is seeking to build the deception around a vulnerability in an IoT platform and its use case rather than a specific user’s platform profile. Both attacks are behaviorally deceptive, irrespective of whether they are visually convincing or not, and both attacks are one-off in their deception but require multiple user steps to complete the deception and exploitation. By showing that both attacks focus on the IoT product behavior, rather than the users, it becomes clear that the S-SDLC requirements and testing stages would play a pivotal role in helping to mitigate these attacks. Crucially, it is the system-to-system interfaces of each IoT platform and their interaction with the ecosystem’s WSoI (Case A: cloud-based services over the Internet, Case B: Twitter application add-ons) that need addressing.
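The comparison above can be reproduced mechanically. In this minimal sketch, each case is a mapping from criterion to a set of labels, and the shared traits are the per-criterion intersections. The label strings are shorthand for the classifications given for Case A and Case B; the function name `shared_traits` is an assumption made for the example.

```python
# Classifications of the two attack cases, as given in the text.
CASE_A = {
    "TD": {"promiscuous"},
    "MD": {"local"},
    "MA": {"automated"},
    "DV": {"cosmetic", "behavioral"},
    "IM": {"programmatic"},
    "ES": {"multi-step"},
    "AP": {"one-off"},
}
CASE_B = {
    "TD": {"promiscuous"},
    "MD": {"remote"},
    "MA": {"manual"},
    "DV": {"behavioral"},
    "IM": {"user-interface"},
    "ES": {"multi-step"},
    "AP": {"one-off"},
}


def shared_traits(a, b):
    """Return, per criterion, the labels present in both classifications."""
    return {key: a[key] & b[key] for key in a if key in b and a[key] & b[key]}


shared = shared_traits(CASE_A, CASE_B)
for criterion, labels in shared.items():
    print(criterion, sorted(labels))
# TD ['promiscuous']
# DV ['behavioral']
# ES ['multi-step']
# AP ['one-off']
```

The criteria that drop out (MD, MA, IM) are the ones where the two attacks differ, so a defense built on the four shared traits addresses both cases.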

Each of the IoT devices, their interface contracts between other IoT platforms/devices, and the functionality they extend should be clearly defined and then evaluated against different user deployment scenarios. In this way, developers can pinpoint specific functionality supplied by the system that is vulnerable to manipulation. Here, the manipulation of features supplied by the IoT devices in each attack case could easily be highlighted by reviewing each interface contract, then conducting a robust test of its functionality in different user deployment scenarios. Since both attacks’ deceptions are one-off, they may be hard to identify and prevent; therefore, it is even more important to rationalize system interface requirements before providing the users with functionality that the developers are not able (or willing) to protect. Where each attack requires multiple user steps to complete, integration of further authentication mechanisms for more significant functionality requests between interfaces should be enforced and reviewed through testing. This approach can help to determine whether extra security procedures should be enforced before a user commits a potentially compromising action (e.g., forcing a user to review a warning or confirm their identity through multi-factor authentication).
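One way to picture the extra security procedures described above is a small policy gate that decides which checks a request must pass before it is committed. This is a sketch under stated assumptions: the action names in `SENSITIVE_ACTIONS`, the `source_verified` flag, and the check labels are hypothetical and would come from the interface contracts of a real platform.

```python
# Hypothetical set of actions considered potentially compromising.
SENSITIVE_ACTIONS = {"install_update", "grant_app_permission", "open_external_url"}


def required_checks(action, source_verified):
    """List the extra steps a user must complete before the action is committed."""
    checks = []
    if action in SENSITIVE_ACTIONS:
        # Every sensitive action forces the user to review a warning.
        checks.append("show_warning")
        if not source_verified:
            # Requests from an unverified source also need identity confirmation.
            checks.append("multi_factor_authentication")
    return checks


print(required_checks("install_update", source_verified=False))
print(required_checks("read_temperature", source_verified=False))
```

In both attack cases the final user step (running the patch, granting the Twitter app permissions) would fall under such a gate, giving the user a second chance to notice the deception.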

User Susceptibility Profiling

In order to provide a robust defense against social engineering attacks, responsibility cannot be laid solely upon the shoulders of system developers or the organizations that provide access to a computer system, whether that is an IoT platform connected to the Internet, a LAN, or a near-field communications medium. The users of the system are just as important, if not more so: they are relied upon to use the computer securely and to ensure that their actions do not inadvertently result in an information security compromise. Remember, there is no silver bullet for protecting against human error.

Identifying a key set of measurable user attributes can help to provide a basis for modeling which type(s) of user profiles are more or less likely to be susceptible to a deception-based attack. Such attributes could be used to define features for predicting and estimating user susceptibility when using a specific platform or range of platforms. Crucially, access to a user susceptibility profile provides the basis for applying a threshold at which the probability of user susceptibility triggers security-enforcing actions aimed at minimizing and/or mitigating exploitation.
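The thresholding idea can be sketched as a simple scoring function. The article does not name specific attributes or a model, so everything below is assumed for illustration: the three attributes, their weights, the bias, the logistic form, and the 0.5 threshold are invented placeholders that a real system would replace with values fitted to measured user data.

```python
import math

# Hypothetical attribute weights; negative values reduce susceptibility.
WEIGHTS = {
    "security_training": -1.2,     # 1 if the user completed awareness training, else 0
    "platform_familiarity": -0.8,  # 0.0 (new to the platform) .. 1.0 (expert)
    "usage_frequency": 0.5,        # 0.0 (rare use) .. 1.0 (constant use)
}
BIAS = 0.3        # assumed baseline
THRESHOLD = 0.5   # assumed trigger point for security-enforcing actions


def susceptibility(profile):
    """Logistic score in (0, 1): higher means more likely to be deceived."""
    z = BIAS + sum(WEIGHTS[name] * profile.get(name, 0.0) for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def security_action(profile, threshold=THRESHOLD):
    """Map the score to an enforcement decision."""
    if susceptibility(profile) >= threshold:
        return "require_confirmation"
    return "allow"


novice = {"security_training": 0, "platform_familiarity": 0.1, "usage_frequency": 0.9}
trained = {"security_training": 1, "platform_familiarity": 0.9, "usage_frequency": 0.5}
print(security_action(novice))   # require_confirmation
print(security_action(trained))  # allow
```

The point of the sketch is the shape of the mechanism: measurable attributes feed a probability estimate, and crossing a threshold triggers a mitigating action rather than blocking the user outright.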

Human as a Sensor (HaaS)

The concept of the human as a sensor has been employed extensively and successfully for the detection of threats and adverse conditions in physical space; for example, to report road traffic anomalies, detect unfolding emergencies, and improve the situational awareness of first responders through social media. In a similar manner, human sensing can be applied to detect and report threats in cyberspace as well. In fact, as the IoT crosses the cyber-physical boundary, the ability of users to report suspected attacks, both cyber and physical, may help to detect attacks initiated in one space that result in an effect on the other. In this respect, it then becomes particularly important to be able to tell to what extent users can correctly detect deception-based security threats, leveraging the intelligence provided by users to augment IoT cyber situational awareness.

Within a smart city, users are likely to be exposed to many different IoT interfaces, such as advertising, multimedia, and wireless multicast feeds in the local geographic area (e.g., local car park capacity, what’s on at the cinema, popular restaurants). Should any of these interfaces be targeted by an attacker using social engineering, users can play an important role in identifying deception attempts. In this example, the user can open their HaaS tool within their smartphone to report any suspected attacks, which can then be directly fed to the smart city security-monitoring system. Free car parking might even be an incentive for correctly reported attacks!
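A monitoring system receiving such reports needs some way to weigh them. The sketch below is one possible approach, not a description of an existing HaaS tool: each reporter has a reliability estimate (for example, the fraction of their past reports that proved correct), and reports about the same interface are combined as 1 minus the product of (1 - reliability) over distinct reporters. The `Report` fields, interface identifiers, and reliability figures are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    reporter_id: str
    interface_id: str   # hypothetical identifier, e.g. "carpark-display-12"
    description: str


def credibility(reports, reliability, default=0.5):
    """Score each reported interface from the reliability of its distinct reporters."""
    reporters = defaultdict(set)
    for report in reports:
        # Repeated reports from the same user count once.
        reporters[report.interface_id].add(report.reporter_id)
    scores = {}
    for interface_id, ids in reporters.items():
        miss = 1.0  # probability that every reporter is mistaken
        for reporter_id in ids:
            miss *= 1.0 - reliability.get(reporter_id, default)
        scores[interface_id] = 1.0 - miss
    return scores


reports = [
    Report("alice", "carpark-display-12", "asks for card details to 'reserve' a space"),
    Report("bob", "carpark-display-12", "shows a QR code that was not there yesterday"),
    Report("carol", "cinema-listing-3", "looks odd"),
]
reliability = {"alice": 0.9, "bob": 0.6, "carol": 0.2}  # assumed past accuracy
for interface_id, score in credibility(reports, reliability).items():
    print(interface_id, round(score, 2))
```

With these assumed figures the car park display scores roughly 0.96 and the cinema listing roughly 0.2, so two independent reports from reliable users outweigh a single report from an unreliable one.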

Conclusion

The IoT promises to synergize technology in new and innovative ways, and in doing so it presents major social, business, and economic benefits for modern society. Equally, for cybercriminals, the IoT promises significant rewards if they can execute a social engineering attack successfully, because hacking the user can provide access to all the “things” that they control. The more successful social engineering attacks against the IoT are, the more user confidence in its security is undermined, ultimately delaying adoption of the IoT and the realization of its potential benefits.

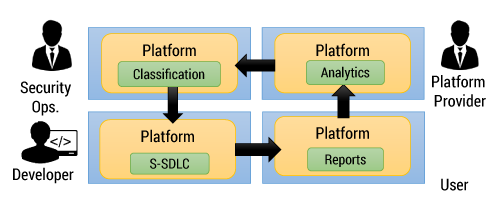

Fundamentally, protecting the integrity of the IoT is a two-way street. System developers should ensure that they employ best practice frameworks for producing secure IoT platforms. Security should be treated as an enabler of system functionality and not be a cost-based bolt-on or ignored completely. For their part, users are a crucial firewall in detecting social engineering threats in the IoT, and it is important that they be empowered to report potential threats, especially as they will be familiar with their own environment and more sensitive to its anomalous behavior. At the same time, it is helpful to be able to measure whether users will likely be deceived by social engineering attacks in an IoT ecosystem; therefore, as part of security awareness, it is crucial that the IoT be factored into training material. Finally, as shown in Figure 8, each of these approaches provides complementary tools that help provide a through-life defense architecture against social engineering attacks in the IoT.

Figure 8: … of user interfaces in an Internet-capable platform.

To improve IoT security, system developers must empower user threat detection with a mechanism to report suspected attacks and review/analyze user reports to determine their credibility. If they decide an attack report is credible, they can then apply a generic classification to determine the key aspects of the attack and finally integrate these attack vectors as patch parameters within the platform “release/maintain” phase of the S-SDLC.

As cryptographer Bruce Schneier once said, “People don’t understand computers. Computers are magical boxes that do things. People believe what computers tell them.” Trust lies at the heart of securing the IoT against deception-based attacks, and thus in order to instill trust, it is device integrity that must be protected to prevent user compromise.

Endnote

1 Loukas, George. Cyber-Physical Attacks: A Growing Invisible Threat. Butterworth-Heinemann (Elsevier), 2015.