AMPLIFY VOL. 38, NO. 6

Contemporary discourse around AI is often infused with narratives that celebrate its boundless promise, its disruptive capacity, and its role in shaping competitive advantage. From financial services and healthcare to transportation and supply chains, AI has already demonstrated its capacity to reconfigure industries. However, this enthusiasm obscures a sobering truth that rarely receives adequate attention: AI systems are inherently dynamic; they are not static tools to be deployed once and trusted indefinitely.

Consider the parallels. When an airline invests in a new fleet, it models the lifespan of each aircraft, plans for maintenance cycles, and budgets for eventual replacement. When a hospital installs advanced diagnostic equipment, it tracks performance drift, schedules recalibrations, and anticipates obsolescence. When companies deploy AI models (e.g., fraud-detection algorithms, recommendation engines, customer service bots), these lifecycle dynamics are often ignored. This is particularly true for AI systems deployed and managed internally by the organization itself rather than as cloud-managed services.

Many AI capabilities are now accessed through managed cloud platforms (with providers handling model maintenance and updates), but this article focuses on enterprise-deployed, narrow AI systems that are typically managed in-house. These systems often support domain-specific use cases (e.g., fraud detection, diagnostics) and require the deploying organization to actively monitor performance, retrain models, and plan for obsolescence.

Recent research shows that AI models exhibit temporal degradation: performance decays over time as data patterns shift, environments change, or model assumptions erode.1,2 A model that performs well in January, for example, may have become a silent liability by December. In industries such as finance or healthcare, this kind of unnoticed decay can cause lost business value, regulatory noncompliance, and reputational harm.

AI investments are growing larger and more embedded, but AI governance frameworks remain immature, particularly when it comes to tracking long-term asset health.3,4

Moreover, external forces accelerate AI aging. Regulatory landscapes shift (e.g., new EU AI Act requirements), and competitors launch disruptive models. Foundational shifts in AI paradigms, such as the rapid emergence of large language models, can cause once-leading systems to become outdated almost overnight. In this volatile context, executives must treat AI systems as living, dynamic assets requiring ongoing stewardship.

Failure to do so carries tangible business risk:

- Financial risk: sunk costs in underperforming models
- Operational risk: degraded customer experience, errors in critical processes
- Compliance risk: outdated models violating new regulations
- Strategic risk: losing market edge due to slow refresh cycles

Using Survival Analysis to Manage the AI Lifecycle

The longevity of AI systems has emerged as an under-explored yet strategically vital dimension of AI governance. Business investments in AI continue to accelerate, but mechanisms to anticipate and manage the degradation of these assets remain fragmented and inconsistent. This article proposes a conceptual framework that uses the well-established methodology of survival analysis to improve AI asset management.

Long established in biomedical research and reliability engineering, survival analysis estimates the probability that a given entity will continue to function over time. In healthcare, it predicts patient survival; in engineering, it models the time until component failure.

Recent research has demonstrated its usefulness in other domains with time-to-event outcomes, including lithium-ion battery degradation and predictive maintenance in industrial fleets.5 We believe survival analysis can be applied equally well to AI systems by conceptualizing them as dynamic, degradable assets operating within evolving environments.

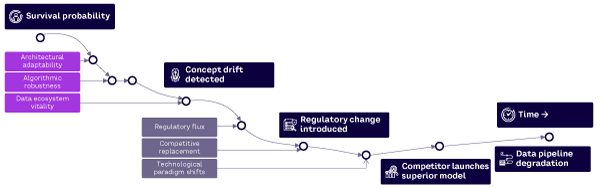

We model the survival of each AI asset (e.g., a deployed machine learning model) with a function S(t), the probability that the model is still delivering acceptable performance at time t. Plotted over time, S(t) traces a survival curve that captures the evolving risk of obsolescence or failure as the system ages (see Figure 1).
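For readers who want the formal definitions, the link between S(t) and the rate of degradation can be written in standard survival-analysis notation. Here T (the time at which the model first falls below its acceptable-performance threshold) and the hazard function h(t) are conventional symbols introduced for illustration; h(t) is the instantaneous failure rate among models still performing acceptably at time t.

```latex
S(t) = \Pr(T > t), \qquad
h(t) = \lim_{\Delta t \to 0} \frac{\Pr(t \le T < t + \Delta t \mid T \ge t)}{\Delta t}, \qquad
S(t) = \exp\!\left(-\int_0^{t} h(u)\,du\right)
```

S(t) starts at 1 and never increases; a survival curve that steepens corresponds to a rising hazard.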

Importantly, performance degradation in AI is typically multifactorial, driven by a combination of internal and external variables. Our framework incorporates both categories.

- Endogenous factors (internal)
  - Architectural adaptability: the flexibility of a model’s architecture to accommodate new data or retraining cycles without catastrophic forgetting
  - Algorithmic robustness: the resilience of model performance under data drift (changes in the input data distribution over time), concept drift (changes in the relationship between inputs and outputs), and shifting feature distributions (e.g., variable ranges evolving due to environmental or user behavior changes)6,7
  - Data ecosystem vitality: the freshness, quality, and stability of input data streams, which directly affect model relevance and accuracy8
- Exogenous factors (external)
  - Regulatory flux: changes in legal or compliance requirements that render models noncompliant or require recertification9
  - Competitive displacement: the emergence of superior models or market standards that diminish the relative value of an existing system
  - Technological paradigm shifts: foundational shifts in the AI field, such as the transition from narrow models to foundation models, which can accelerate obsolescence
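Data drift, one of the robustness threats listed above, can be quantified in many ways. As one illustration (our choice of technique, not one the framework prescribes), the Population Stability Index (PSI) compares how a feature's values fall into bins in a reference sample, such as training data, versus a recent production sample. The sketch assumes NumPy; the bin count, the `eps` smoothing constant, and the synthetic data are illustrative assumptions. A PSI series tracked month by month is the kind of time-varying covariate a survival model can consume.

```python
import numpy as np


def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """Population Stability Index (PSI) between a reference sample of one feature and a current sample.

    0 means the binned distributions match; larger values indicate more drift.
    """
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior bin edges at reference quantiles, so each bin holds roughly equal reference mass.
    inner_edges = np.quantile(reference, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    # searchsorted maps every value to a bin index in [0, n_bins - 1]; the outer bins are open-ended.
    ref_counts = np.bincount(np.searchsorted(inner_edges, reference, side="right"), minlength=n_bins)
    cur_counts = np.bincount(np.searchsorted(inner_edges, current, side="right"), minlength=n_bins)
    # Convert counts to proportions; eps keeps empty bins from producing log(0) or division by zero.
    ref_frac = np.clip(ref_counts / ref_counts.sum(), eps, None)
    cur_frac = np.clip(cur_counts / cur_counts.sum(), eps, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))


# Synthetic illustration: a feature whose mean shifts by one standard deviation after deployment.
rng = np.random.default_rng(0)
training_sample = rng.normal(0.0, 1.0, 5000)
print(population_stability_index(training_sample, rng.normal(0.0, 1.0, 5000)))  # small: no drift
print(population_stability_index(training_sample, rng.normal(1.0, 1.0, 5000)))  # much larger: drift
```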

It is important to distinguish between two forms of degradation. Intrinsic degradation occurs when a model’s performance declines due to internal factors, such as data drift or architectural limitations. Relative degradation arises when a model becomes obsolete not because it performs worse than before but because superior alternatives emerge (raising the standard for what is considered acceptable or competitive). Both forms are critical to understanding AI asset survival and are integrated into our framework.
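The difference between the two forms can be made concrete with a minimal sketch, assuming monthly metric readings and a hypothetical market benchmark (for example, the accuracy of the best available alternative). Intrinsic degradation is measured against a fixed bar, while relative degradation is measured against a bar that moves with the benchmark. All names, thresholds, and numbers here are illustrative.

```python
from typing import Dict, List, Optional, Sequence


def first_failure(metric: Sequence[float], threshold: Sequence[float]) -> Optional[int]:
    """Index of the first period in which the metric falls below that period's threshold, or None."""
    for t, (value, limit) in enumerate(zip(metric, threshold)):
        if value < limit:
            return t
    return None


def degradation_onset(metric: Sequence[float], fixed_threshold: float,
                      benchmark: Sequence[float], tolerance: float) -> Dict[str, Optional[int]]:
    """Compare intrinsic failure (fixed bar) with relative failure (bar tied to a moving benchmark)."""
    intrinsic: List[float] = [fixed_threshold] * len(metric)
    # Hypothetical relative bar: stay within `tolerance` of the best alternative in each period.
    relative: List[float] = [b - tolerance for b in benchmark]
    return {
        "intrinsic": first_failure(metric, intrinsic),
        "relative": first_failure(metric, relative),
    }


# A model whose own accuracy never changes, while the (hypothetical) market benchmark keeps improving.
own_accuracy = [0.90] * 6
market_benchmark = [0.88, 0.90, 0.92, 0.94, 0.95, 0.96]
print(degradation_onset(own_accuracy, fixed_threshold=0.85, benchmark=market_benchmark, tolerance=0.03))
# {'intrinsic': None, 'relative': 3}
```

Under the fixed bar the model never fails, yet under the moving bar it is effectively obsolete from month index 3, even though its own accuracy never changed.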

Survival analysis provides several advantages over conventional monitoring methods. First, it enables forecasting of AI asset longevity rather than reactive detection of failure. Second, it supports early-warning detection (identifying indicators that an AI system is entering a high-risk phase of degradation). Third, it offers a foundation for adaptive governance frameworks in which survival-informed insights guide maintenance, retraining, and retirement decisions.

Progress in deep learning–driven survival modeling has broadened our ability to forecast the longevity of AI systems. Newer architectures, such as dynamic survival networks, accommodate time-varying covariates and complex, nonlinear hazard functions, offering a far more nuanced and realistic depiction of AI lifecycle risks.
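To show what time-varying covariates mean in practice, here is a deliberately simple stand-in for those deep models: a discrete-time survival model with a logistic hazard, in which each period's covariates (for example, a drift score and months since retraining) shift the probability of first failing the performance threshold in that period. The covariates and coefficients are hypothetical; a deep architecture such as a dynamic survival network would replace the linear score with a learned nonlinear function.

```python
import math
from typing import List, Sequence


def survival_curve(covariates_by_period: Sequence[Sequence[float]],
                   coefficients: Sequence[float], intercept: float) -> List[float]:
    """S(t) for t = 1..T under a discrete-time logistic hazard with time-varying covariates.

    hazard_t = sigmoid(intercept + coefficients . x_t) is the probability that the model first
    fails its performance threshold in period t, given that it was still acceptable before t.
    """
    curve: List[float] = []
    s = 1.0
    for x_t in covariates_by_period:
        z = intercept + sum(b * x for b, x in zip(coefficients, x_t))
        hazard = 1.0 / (1.0 + math.exp(-z))
        s *= 1.0 - hazard  # the model survives period t only if no failure occurs in it
        curve.append(s)
    return curve


# Hypothetical covariates per month: [drift score, months since last retraining].
months = [[0.05, 1], [0.10, 2], [0.30, 3], [0.60, 4]]
# Hypothetical coefficients; in practice they would be fitted to historical deployments.
print(survival_curve(months, coefficients=[4.0, 0.2], intercept=-5.0))
```

Because S(t) is the running product of (1 − hazard), a spike in a covariate such as drift raises that period's hazard and steepens the curve from that point on.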

Just as crucial are strides in model interpretability. Tools like median-SHAP have introduced much-needed transparency into survival models, helping practitioners and decision makers alike identify the most influential factors shaping an AI system’s projected lifespan. This interpretability is a necessity for responsible AI governance and informed risk management.

By reframing AI lifecycle management through the lens of survival analysis, organizations gain a structured, quantitative approach to understanding and mitigating AI asset risk.

Applying Survival Analysis to AI Assets

The first step in applying survival analysis to an AI asset is defining an appropriate “failure” or “degradation” event for the system in question. Unlike mechanical systems, in which failure may be binary (operational versus nonoperational), AI assets tend to degrade along a continuum of performance. Organizations must therefore establish performance thresholds below which a model is no longer fit for purpose. For example, a financial fraud-detection model might set minimum acceptable precision and recall (or a minimum area under the precision-recall curve); a healthcare diagnostic model might set clinical-accuracy benchmarks such as minimum sensitivity and specificity.
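Turning a performance threshold into survival data can be sketched as follows, assuming a hypothetical monthly monitoring history per model. The key detail is censoring: a model that has not yet crossed its threshold (or was retired for unrelated reasons) contributes a duration without an observed event, and discarding such records would bias survival estimates downward.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MonitoringRecord:
    """Hypothetical monitoring history for one deployed model."""
    model_id: str
    monthly_metric: List[float]  # e.g., precision measured once per month since deployment


def to_time_to_event(record: MonitoringRecord, threshold: float) -> Tuple[int, bool]:
    """Return (duration_in_months, event_observed) for survival analysis.

    The event is the first month in which the metric drops below `threshold`. A model that has
    never crossed the threshold is right-censored at its last observed month.
    """
    for month, value in enumerate(record.monthly_metric, start=1):
        if value < threshold:
            return month, True
    return len(record.monthly_metric), False


history = [
    MonitoringRecord("fraud-v1", [0.82, 0.80, 0.77, 0.74]),
    MonitoringRecord("fraud-v2", [0.85, 0.84, 0.83]),
]
print([to_time_to_event(r, threshold=0.75) for r in history])  # [(4, True), (3, False)]
```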

Once the target metric and threshold are defined, survival curves can be estimated from historical model performance data, operational monitoring logs, and contextual factors. For narrow or highly specialized models, however, relevant historical data may be limited. In such cases, organizations often rely on internal benchmarking against similar past deployments or generate synthetic datasets to simulate expected performance trends. Deep survival methods such as Cox-Time and DeepHit relax the restrictive assumptions of classical models (such as proportional hazards), and dynamic survival networks additionally handle time-varying covariates, capturing how both internal attributes and external shocks influence survival probabilities.10,11
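Once histories are in (duration, event) form, the classic nonparametric starting point is the Kaplan-Meier estimator, which multiplies, at each observed failure time, the fraction of still-at-risk models that did not fail. The minimal implementation below is for illustration only; the six deployment histories are hypothetical, and deep methods such as those named above would be the next step when covariates matter.

```python
from typing import List, Sequence, Tuple


def kaplan_meier(durations: Sequence[float], events: Sequence[bool]) -> List[Tuple[float, float]]:
    """Kaplan-Meier estimate of S(t), returned as (event_time, survival_probability) steps.

    Censored records count as "at risk" up to and including their last observed time,
    then leave the risk set without reducing the survival estimate.
    """
    data = sorted(zip(durations, events))
    n_at_risk = len(data)
    survival = 1.0
    steps: List[Tuple[float, float]] = []
    i = 0
    while i < len(data):
        t = data[i][0]
        failures = leaving = 0
        while i < len(data) and data[i][0] == t:  # group all records sharing this time
            failures += int(data[i][1])
            leaving += 1
            i += 1
        if failures:
            survival *= 1.0 - failures / n_at_risk
            steps.append((t, survival))
        n_at_risk -= leaving
    return steps


# Hypothetical months until six past deployments fell below threshold (False = censored).
print(kaplan_meier([3, 5, 5, 8, 12, 12], [True, True, False, True, False, False]))
# approximately [(3, 0.833), (5, 0.667), (8, 0.444)]
```

For production work, an established library such as lifelines (its `KaplanMeierFitter` class) is the more usual choice than hand-rolled code.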

Early-warning signs can be derived from survival curves by identifying inflection points, where the estimated probability of maintaining acceptable performance begins to decline rapidly. Such signals can trigger proactive governance interventions, such as scheduling retraining or a replacement review before the model crosses its threshold.
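One way to operationalize these early-warning signals is sketched below, assuming a forecast survival curve with one value per month (for example, the output of a fitted model). It reports the first month below an alert level and the month with the largest one-step drop, which serves as a simple discrete proxy for the inflection point. The alert level and the forecast values are hypothetical.

```python
from typing import Dict, List, Optional, Sequence


def early_warning(survival: Sequence[float], alert_level: float) -> Dict[str, Optional[int]]:
    """Two simple early-warning signals from a survival curve indexed by period (0, 1, 2, ...).

    - first_below_alert: first period in which S(t) falls below the alert level
    - steepest_decline_at: period with the largest one-step drop in S(t), a discrete stand-in
      for the inflection point where degradation accelerates
    """
    first_below = next((t for t, s in enumerate(survival) if s < alert_level), None)
    drops: List[float] = [survival[t - 1] - survival[t] for t in range(1, len(survival))]
    steepest = drops.index(max(drops)) + 1 if drops else None
    return {"first_below_alert": first_below, "steepest_decline_at": steepest}


forecast = [1.00, 0.97, 0.93, 0.85, 0.70, 0.62]  # hypothetical forecast S(t), one value per month
print(early_warning(forecast, alert_level=0.9))
# {'first_below_alert': 3, 'steepest_decline_at': 4}
```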

Integrating Survival Analysis into AI Governance

A major strength of survival modeling is its compatibility with emerging AI governance frameworks. For example, researchers have proposed an hourglass governance model that embeds accountability throughout the AI lifecycle.12 Although the hourglass model emphasizes continuity and lifecycle control, it is not always possible to recover a degrading model. In some cases (particularly when architectural constraints or external paradigm shifts are too great), full model replacement or redevelopment may be required. Survival curves provide quantitative input for these decisions, supporting lifecycle budgeting, model monitoring, and retirement planning.

In practical terms, survival-informed governance can:

- Trigger adaptive maintenance cycles: for example, retraining before critical degradation occurs
- Support risk-adjusted ROI calculations: factoring in expected asset lifespan
- Inform compliance strategies: ensuring AI systems remain aligned with evolving regulatory standards
- Prioritize model-refresh investments: focusing resources on high-risk assets
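The risk-adjusted ROI idea in the list above can be illustrated with a small calculation. A common summary of expected lifespan over a fixed horizon is the restricted mean survival time, which in discrete time is the sum of S(t) across periods. In this sketch, benefits accrue only in expectation while the model survives, whereas running costs are assumed to continue for the full horizon; all cost and benefit figures are hypothetical.

```python
from typing import Sequence


def expected_useful_months(survival: Sequence[float]) -> float:
    """Expected number of months of acceptable performance within the horizon.

    Discrete restricted mean survival time: the sum of S(t) over t = 1..H.
    """
    return float(sum(survival))


def risk_adjusted_roi(survival: Sequence[float], monthly_benefit: float,
                      upfront_cost: float, monthly_run_cost: float) -> float:
    """ROI in which benefits accrue only while the model is (in expectation) still fit for purpose.

    Assumption: running costs are incurred for the full horizon, whether or not the model has degraded.
    """
    benefit = monthly_benefit * expected_useful_months(survival)
    cost = upfront_cost + monthly_run_cost * len(survival)
    return (benefit - cost) / cost


static = risk_adjusted_roi([1.0] * 4, monthly_benefit=100, upfront_cost=200, monthly_run_cost=10)
decaying = risk_adjusted_roi([0.95, 0.90, 0.85, 0.80], monthly_benefit=100, upfront_cost=200, monthly_run_cost=10)
print(round(static, 3), round(decaying, 3))  # 0.667 0.458
```

With identical costs and benefits, accounting for decay lowers the ROI from about 0.667 to about 0.458, a gap that a static ROI model would hide.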

Moreover, survival-informed metrics can be incorporated into service-level agreements (SLAs) and internal audit processes, creating a more robust accountability structure.13

Limits of Modeling: Transparency & Realism

It is important to acknowledge that survival models are not crystal balls. Indeed, the modeling of AI asset survival cannot fully account for all externalities or emergent factors. Technological paradigm shifts (e.g., the rapid rise of generative AI) and abrupt regulatory changes are likely to outpace any predictive model.

To address this, survival analysis should be used as a strategic tool for directional insight, not as a deterministic forecast. Recent advances in explainability also enable business leaders to interrogate survival models, revealing which factors are driving risk and where uncertainties lie.14

Organizations should also combine survival modeling with robust scenario planning, using model outputs as one input among many in strategic decision-making.

Lessons from AI Asset Obsolescence

The rapid pace of innovation and the volatility of technological ecosystems have already produced high-profile examples of model obsolescence. The following examples illustrate a key pattern: the models in question did not degrade internally; they lost relevance relative to newer, more powerful alternatives. This form of relative degradation is especially common in fast-moving domains. Later, we briefly discuss intrinsic degradation, in which models decay due to internal factors like data drift or architectural limits.

OpenAI

Few companies have moved faster than OpenAI. In 2020, OpenAI released GPT-3, a language model that quickly became a benchmark for natural language processing (NLP) performance. Organizations rushed to build applications on top of GPT-3, embedding it into chatbots, content generation tools, and customer service platforms.

GPT-4 followed in 2023 and GPT-4o in 2024, each demonstrating significant performance gains, broader capabilities, and improved safety protocols. These improvements rendered many GPT-3-based applications obsolete: no longer competitive in markets that increasingly expected the sophistication of newer models. Companies that had deeply integrated GPT-3 faced the costly decision of whether to migrate, retrain, or rebuild their AI infrastructure.

The degradation was not due to internal technical failure but to the external benchmark shifting upward. From a survival modeling perspective, this reflects a competitive displacement risk: the survival curve steepens not because the asset degrades intrinsically, but because the environment around it changes.

Organizations employing survival analysis could have anticipated this risk by monitoring indicators such as:

- Frequency and scale of major model updates from key AI vendors
- Industry benchmarking reports signaling performance-expectation shifts
- Acceleration in model capability metrics (e.g., parameters, context windows, multimodal abilities)

Proactive monitoring would have supported earlier budget allocation for migration and reduced business disruption.

The Rise of Foundation Models

In the mid-2010s, VGGNet was widely regarded as a top-performing deep convolutional neural network for image-recognition tasks. Organizations, particularly those in healthcare imaging and autonomous driving, invested heavily in VGGNet-based architectures.

However, the advent of ResNet (residual neural network) and, later, vision transformers (ViTs) dramatically shifted the performance frontier. ResNet’s skip (residual) connections addressed the degradation problem that had made very deep networks difficult to train, achieving higher accuracy with more manageable training dynamics. Vision transformers brought further gains by capturing long-range dependencies more effectively.

The result was the structural obsolescence of VGGNet models, even though they remained operational. Organizations that failed to adapt faced competitive disadvantages, with models delivering lower accuracy, longer inference times, or both, relative to newer architectures. This effect was especially pronounced in highly regulated sectors like healthcare and autonomous driving, where evolving safety, accuracy, and explainability standards pushed organizations to adopt models aligned with the latest technical and compliance benchmarks.

Survival analysis frameworks could have flagged early-warning signs such as:

- Emerging research momentum around alternative architectures (publication trends)
- Shifts in benchmarking leaderboards (e.g., ImageNet)
- Performance degradation relative to industry best practices

Incorporating these signals into survival curves would have allowed for more strategic refresh planning, enabling a smoother transition to next-generation architectures.

The OpenAI and VGGNet cases highlight relative obsolescence, but intrinsic degradation is also a significant concern. For example, a predictive maintenance model in manufacturing may deteriorate over time due to cumulative data drift, aging sensor hardware, or repeated deployment without proper recalibration. In such cases, performance may decline even in the absence of superior external models, underscoring the need for robust internal monitoring.

In all cases, the question of AI system longevity extends well beyond technical performance. It is deeply connected to external ecosystem shifts, evolving competitive pressures, and the relentless pace of technological change. In the absence of structured monitoring and predictive frameworks, organizations are left vulnerable to sudden system failures, costly reengineering efforts, erosion of market position, and/or reputational setbacks.

Conversely, firms that integrate survival analysis into their AI governance protocols gain a forward-looking advantage. By identifying early signals of obsolescence and planning transitions accordingly, they safeguard their existing AI investments while strengthening their capacity to adapt. In doing so, they are better positioned to harness the next wave of technological innovation with agility and confidence.

Implications for AI Governance & Business Strategy

Integrating survival analysis into AI lifecycle management is a strategic shift that extends beyond technical oversight into the domains of corporate governance, enterprise risk, and long-term business planning. It marks a departure from the prevailing set-it-and-forget-it mentality, introducing a more anticipatory and responsible approach to AI asset stewardship. This section shows how survival modeling can serve as a foundation for strengthening governance, enhancing strategic foresight, and embedding accountability across the AI value chain.

Survival Analysis as a Governance Tool

A growing body of research on AI governance highlights the need for continuous, lifecycle-aware oversight, which survival analysis is well suited to provide.15,16 Survival curves offer an integrative lens that facilitates shared understanding across data science, compliance, enterprise risk, and corporate strategy.

Areas where survival modeling can be embedded include:

- Lifecycle budgeting. Survival forecasts support more accurate planning for retraining and model updates, helping CFOs align financial decisions with AI system needs.
- ROI adjustment. Unlike static ROI models, survival-informed analysis accounts for performance decay, enabling more realistic and risk-aware investment decisions.
- Regulatory compliance. With regulators demanding ongoing validation (e.g., EU AI Act), survival analysis offers a structured way to demonstrate long-term model reliability.
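For lifecycle budgeting, a survival forecast can be turned into a retraining schedule. The sketch below makes a strong simplifying assumption, namely that each retraining restores the model to the beginning of the same survival curve, and schedules retraining whenever forecast S(t) would fall below a target level. The target, horizon, forecast, and cost figures are hypothetical.

```python
import math
from typing import Sequence, Tuple


def retraining_plan(survival: Sequence[float], target: float,
                    horizon_months: int, cost_per_retrain: float) -> Tuple[int, float]:
    """Number of retraining cycles (and their cost) needed to keep forecast S(t) >= target.

    Assumes each retraining restores the model to the start of the same survival curve.
    """
    months_ok = 0
    for s in survival:  # survival[i] is the forecast S(t) for month i + 1 after (re)training
        if s < target:
            break
        months_ok += 1
    if months_ok == 0:
        raise ValueError("Forecast is below target from the first month; retraining alone will not fix this.")
    # If the curve never drops below target, months_ok equals the forecast length,
    # which makes the plan conservative (it may schedule more retraining than needed).
    deployment_cycles = math.ceil(horizon_months / months_ok)
    retrains = deployment_cycles - 1  # the first cycle is the initial deployment
    return retrains, retrains * cost_per_retrain


print(retraining_plan([0.98, 0.95, 0.91, 0.86, 0.79, 0.70], target=0.85,
                      horizon_months=24, cost_per_retrain=20_000))  # (5, 100000)
```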

In essence, survival curves function as a “health record” for AI assets — capturing performance over time and surfacing unexpected deviations that can trigger reexamination of assumptions, deployment context, or model design. When embedded into governance and strategic-planning processes, this approach transforms AI from a static capital expense into an actively managed enterprise asset.

Forecasting Risk & Prioritizing Investments

Survival-informed governance can also create strategic advantage. In fast-moving industries, the ability to anticipate when AI assets will lose competitiveness (and plan transitions accordingly) can determine market leadership.

For example, firms that monitored survival indicators in NLP models during the rapid evolution from GPT-3 to GPT-4 were better positioned to manage customer expectations, allocate resources for migration, and avoid reputational risks from outdated offerings. Similarly, proactive lifecycle management in computer vision has enabled leading healthcare firms to maintain clinical accuracy by adopting newer models as imaging modalities and model architectures evolved and performance standards were redefined.

By aggregating survival curves across AI assets, organizations can:

- Prioritize high-risk models for early intervention
- Identify robust models suitable for long-term deployment
- Balance investment between innovation (new models) and sustainability (maintaining existing assets)
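Portfolio-level triage can be sketched by reading each model's forecast survival at a common planning horizon. The model names, curves, horizon, and cutoff below are hypothetical; in practice the curves would come from fitted survival models, and each curve must extend at least to the chosen horizon.

```python
from typing import Dict, List, Sequence, Tuple


def triage_portfolio(curves: Dict[str, Sequence[float]], horizon: int,
                     risk_cutoff: float) -> Tuple[List[str], List[str]]:
    """Split a model portfolio by forecast survival at a planning horizon (1-based month).

    Returns (high_risk, robust), each sorted from lowest to highest S(horizon), so the
    models needing attention soonest come first.
    """
    scored = sorted(((name, curve[horizon - 1]) for name, curve in curves.items()),
                    key=lambda item: item[1])
    high_risk = [name for name, s in scored if s < risk_cutoff]
    robust = [name for name, s in scored if s >= risk_cutoff]
    return high_risk, robust


portfolio = {  # hypothetical forecast S(t) per model, one value per month
    "fraud-detection": [0.95, 0.85, 0.72, 0.60],
    "churn-scoring": [0.99, 0.97, 0.95, 0.93],
    "support-chatbot": [0.90, 0.70, 0.50, 0.30],
}
print(triage_portfolio(portfolio, horizon=3, risk_cutoff=0.8))
# (['support-chatbot', 'fraud-detection'], ['churn-scoring'])
```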

Such portfolio insights support adaptive, resilient AI strategies that are aligned with both business goals and ethical governance imperatives.

Creating Accountable AI

Survival-informed governance also strengthens accountability by making AI system risks more transparent to both internal and external stakeholders. Studies have shown that transparency about AI performance over time is essential to maintaining trust with users, regulators, and society.17,18

Survival curves, particularly when enhanced with explainable AI techniques, can make degradation risks visible not only to technical teams but also to business leaders and external stakeholders. This fosters more informed dialogue about acceptable risk thresholds, update cadences, and end-of-life planning for AI systems.

In this way, survival analysis is not merely a technical enhancement. It is a governance innovation that helps organizations operationalize the principles of accountability, transparency, and continuous oversight that are central to the emerging global consensus on responsible AI.

Limitations & Future Directions

Survival analysis offers a powerful framework for estimating the lifespan of AI assets, but it is important to recognize its inherent limitations. No statistical method, regardless of its complexity, can entirely account for the nuanced, evolving nature of real-world AI environments. Openly acknowledging these constraints preserves analytical integrity and encourages responsible integration of such tools into governance practices.

Modeling Boundaries & Unpredictable Externalities

One challenge lies in the modeling of externalities. Technological paradigm shifts, abrupt regulatory interventions, and sudden market disruptions may introduce discontinuities that survival models, trained on historical data, cannot fully anticipate. For instance, few survival curves could have predicted the sudden leap in NLP capability delivered by large-scale foundation models in 2023/2024 or the accelerated emergence of multimodal AI capabilities.

Moreover, survival models depend on well-defined degradation signals. In domains where such signals are difficult to quantify (or where performance metrics are subjective), modeling reliability may be constrained. Recent studies highlight key limitations in survival modeling, including difficulty calibrating time-to-event predictions, managing censored observations, and accounting for nonlinear degradation patterns under real-world constraints.19,20

Survival analysis frameworks are sensitive to feature selection and context. Factors driving AI asset survival may vary dramatically across industries, regulatory regimes, and organizational maturity levels. Models must therefore be tailored with domain-specific expertise and regularly validated against evolving benchmarks.

Future Research Directions

Recognizing these limitations opens several promising avenues for future development:

- Dynamic survival modeling. The integration of time-varying covariates and longitudinal monitoring offers a pathway to more responsive survival models that are better suited to environments characterized by rapid change.
- Explainability and transparency. Continued advances in interpretable survival modeling will be critical to ensuring that survival analysis can support accountable governance, providing not only risk forecasts but also insight into why certain risks are emerging.21
- Governance integration. Many governance frameworks still lack mechanisms for tracking long-term asset degradation.22 Future work should explore how survival-informed monitoring can be more deeply embedded into governance processes, SLAs, and regulatory reporting.
- Ethical alignment. This is a complex topic requiring careful, dedicated treatment that cannot be included here due to space constraints. As a first step, survival-informed governance can contribute to ethical AI leadership by enhancing transparency and accountability (i.e., providing stakeholders with clearer insights into the risks and limitations of AI systems over time). A fuller exploration of the societal impacts of AI longevity remains an important area for future research.

Survival analysis should be viewed not as a panacea, but as a valuable addition to the AI governance tool kit. Its adoption must be accompanied by critical reflection, transparent communication, and adaptive learning. By doing so, organizations can responsibly harness survival analysis’s strengths while remaining mindful of its boundaries.

Conclusion

In an era when AI increasingly underpins critical business functions, the question of AI asset longevity is urgent. Unfortunately, most organizations lack a structured, predictive approach to managing AI lifecycle risks. Without such tools, they risk financial loss, operational disruption, compliance failure, and diminished competitiveness.

Survival analysis offers a practical and powerful way to address this gap. By modeling AI systems as dynamic assets subject to degradation and external shocks, survival analysis enables business leaders to:

- Forecast performance risks over time
- Identify early signs of obsolescence
- Align governance and budgeting with realistic lifecycle expectations
- Embed accountability and transparency into AI stewardship

Crucially, this approach supports a shift from reactive to proactive AI governance, a transition that is essential as regulatory expectations grow and technological change accelerates.

However, survival modeling is not a definitive predictor. It must be applied with transparency, domain sensitivity, and a recognition of its boundaries. Used appropriately, it complements other governance tools, enhancing both strategic planning and responsible leadership.

Ultimately, understanding AI survival is an accountability imperative. Organizations that embrace this mindset will protect their AI investments and foster trustworthy AI ecosystems that are better aligned with the expectations of customers, regulators, and society.

References

1 Vela, Daniel, et al. “Temporal Quality Degradation in AI Models.” Scientific Reports, Vol. 12, July 2022.

2 Chen, Pin-Yu, and Payel Das. “AI Maintenance: A Robustness Perspective.” Computer, Vol. 56, No. 2, February 2023.

3 Mäntymäki, Matti, et al. “Putting AI Ethics into Practice: The Hourglass Model of Organizational AI Governance.” arXiv preprint, 31 January 2023.

4 Batool, Amna, Didar Zowghi, and Muneera Bano. “AI Governance: A Systematic Literature Review.” AI and Ethics, Vol. 5, January 2025.

5 Xue, Jingyuan, et al. “Survival Analysis with Machine Learning for Predicting Li-ion Battery Remaining Useful Life.” arXiv preprint, 6 May 2025.

6 Bayram, Firas, Bestoun S. Ahmed, and Andreas Kassler. “From Concept Drift to Model Degradation: An Overview on Performance-Aware Drift Detectors.” Knowledge-Based Systems, Vol. 245, June 2022.

7 Vela et al. (see 1).

8 Chen and Das (see 2).

9 Mäntymäki et al. (see 3).

10 Xue et al. (see 5).

11 Mesinovic, Munib, Peter Watkinson, and Tingting Zhu. “DySurv: Dynamic Deep Learning Model for Survival Analysis with Conditional Variational Inference.” Journal of the American Medical Informatics Association (JAMIA), 21 November 2024.

12 Mäntymäki et al. (see 3).

13 Batool et al. (see 4).

14 Mesinovic et al. (see 11).

15 Mäntymäki et al. (see 3).

16 Batool et al. (see 4).

17 Mesinovic et al. (see 11).

18 Chen and Das (see 2).

19 Wiegrebe, Simon, et al. “Deep Learning for Survival Analysis: A Review.” Artificial Intelligence Review, Vol. 57, Article 65, February 2024.

20 Mesinovic et al. (see 11).

21 Mesinovic et al. (see 11).

22 Batool et al. (see 4).