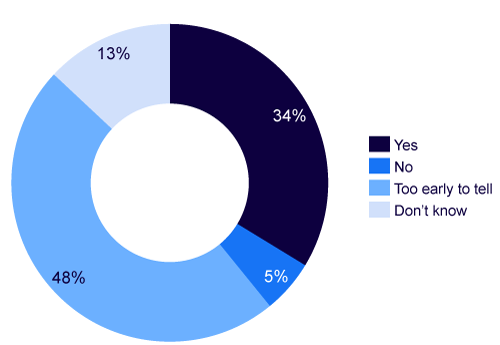

In a recent survey, Cutter Consortium examined how organizations are adopting generative artificial intelligence (GAI), its possible impacts on their businesses, and the key issues they encounter along the way. According to our findings, approximately one-third (34%) of organizations plan to integrate large language models (LLMs) into their own applications (see Figure 1). This is an impressive level of planned adoption, especially considering that most organizations outside of the major tech companies have little to no experience working with LLMs.

Strong interest in LLMs stems from their demonstrated ability to increase the accuracy of natural language processing (NLP) systems. Additionally, the general availability of LLMs, including open source versions, is enabling enterprises, commercial developers, and entrepreneurs to build systems that can perform much more sophisticated NLP tasks.

But some issues are causing companies to pause when it comes to deploying LLMs in production environments. The first involves training. Depending on the application, many organizations will need to train their LLMs on their own data to meet their accuracy requirements and reduce the risk of the hallucinations, biases, and other inconsistencies that have hampered more consumer-oriented language model implementations (e.g., ChatGPT).

This is particularly true when it comes to using LLMs for developing customer-facing applications such as chatbots, conversational interfaces, and intelligent assistants/self-service customer-assist systems (i.e., automated applications where the output is not first screened for accuracy and correctness by a customer service rep or some other human).

Another concern is whether the performance of LLMs degrades over time. This issue came to light in a study by researchers from Stanford University and the University of California, Berkeley, which claims that the performance of OpenAI’s LLMs has decreased over time.

LLMs like GPT-3 and GPT-4 can be updated in various ways, including by being exposed to user feedback during their normal course of use and by developers making changes to their designs. But, according to the researchers, when and how these models are updated is not sufficiently clear. It is also unclear how each update affects the behavior of these LLMs. The researchers add that these unknowns can make it challenging to stably integrate LLMs into larger workflows.

The research team sought to determine if the models were improving over time in response to updating. To do so, they evaluated the behavior of the March 2023 and June 2023 versions of GPT-3.5 and GPT-4 on several tasks. These included solving math problems, answering sensitive/dangerous questions, answering opinion surveys, answering multi-hop knowledge-intensive questions (i.e., questions requiring a complex reasoning process spanning multiple sentences and answers), generating code, answering questions from US medical license exams, and performing visual reasoning.

The researchers report that the performance and behavior of both GPT-3.5 and GPT-4 varied significantly between the two releases: performance on some tasks became substantially worse over time, while it improved on others. The findings point to the need for organizations to continuously monitor and evaluate the behavior of LLMs in production applications. Due to these and other issues, many organizations (nearly half, or 48%, according to our survey) are still unsure about their plans for using LLMs and are taking a wait-and-see approach.
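For teams wondering what continuous monitoring of LLM behavior might look like in practice, the following is a minimal sketch in Python. It scores two model versions on the same fixed set of tasks and flags any task where accuracy has dropped. All names here (`task_accuracy`, `find_regressions`, the `tolerance` threshold) are hypothetical, the models are represented as plain functions rather than calls to any real LLM API, and exact-match scoring is a simplifying assumption rather than the method used in the study.

```python
from typing import Callable, Dict, List, Tuple

# A benchmark maps a task name to a list of (prompt, expected_answer) pairs.
Benchmark = Dict[str, List[Tuple[str, str]]]
# A "model" is any function that takes a prompt and returns a text answer
# (an assumption for illustration; a real system would wrap an LLM call).
Model = Callable[[str], str]


def task_accuracy(model: Model, examples: List[Tuple[str, str]]) -> float:
    """Fraction of examples the model answers correctly (case-insensitive exact match)."""
    if not examples:
        return 0.0
    correct = sum(
        1
        for prompt, expected in examples
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return correct / len(examples)


def find_regressions(
    baseline: Model,
    candidate: Model,
    benchmark: Benchmark,
    tolerance: float = 0.05,
) -> Dict[str, Tuple[float, float]]:
    """Return tasks where the candidate's accuracy fell more than `tolerance` below the baseline's.

    Each flagged task maps to (baseline_accuracy, candidate_accuracy).
    """
    regressions: Dict[str, Tuple[float, float]] = {}
    for task, examples in benchmark.items():
        old_score = task_accuracy(baseline, examples)
        new_score = task_accuracy(candidate, examples)
        if old_score - new_score > tolerance:
            regressions[task] = (old_score, new_score)
    return regressions
```

Run on a schedule, or whenever a vendor announces a model update, a check along these lines would give an organization early warning that an application's behavior has shifted. Real deployments would likely need task-specific scoring rather than exact matching.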

That said, we expect to see LLMs integrated into a broad range of proprietary enterprise applications and commercial enterprise software products — everything from programming and application development tools to customer service, personalization platforms, business intelligence, data integration and access tools, and cybersecurity environments. This is happening at a considerable pace today.

I’d like to get your opinion on the use of LLMs with enterprise applications. As always, your comments will be held in strict confidence. You can email me at experts@cutter.com or call +1 510 356 7299 with your comments.