Digital twins are becoming more commonplace in the defense industry, including one for solid-state radar developed by a US Department of Defense (DoD) contractor to assess high-fidelity search-and-track radar-performance metrics. The metrics are comparable to the deployed system the digital twin represents (referred to here as the “end item”), reducing testing costs for the contractor and DoD.

Cost savings from the radar digital twin (RDT) stem from the ability to digitally assess end item software, verify system-level requirements, support pre-mission analysis and events without hardware, and conduct virtual warfighter training and exercises.

Millions of dollars have been saved from these activities, savings that increase year after year and can be reinvested into product development. Expansion and adoption of these types of digital twins are expected to support additional cost savings and faster product delivery as they evolve (see Figure 1).

RDT model development costs less than traditional modeling and simulation (M&S). RDT’s use of wrapped tactical code (WTC) methodology is also an advantage: it keeps development in lockstep with the development of the end item system. (Note that “tactical code” refers to the end item software.)

RDT provides various configurations to support multiple use cases. Its interface is identical to the end item radar system, so it supports interoperability with defense systems (DS) models that adhere to the end item interfaces. RDT supports a high-fidelity, non-real-time configuration. This configuration is used for singleton radar simulations (using parallelized Monte Carlo simulations) and integration into traditional modeling and simulation.

RDT also supports a medium-fidelity, real-time configuration. This configuration is used for pairwise testing with other DS models and as a substitute for the end item radar in interface-level testing and assessments. The digital twin’s operators include the prime radar contractor, sister contractors that develop DS models, and warfighters (primarily pilots and sailors).

A deployed radar system requires multiple cabinets of equipment to keep up with demanding real-time requirements. RDT’s hardware requirements are lower because they’re based on the computing resources needed for the deployed radar systems minus the resources needed for redundancy and processes not required in the twin, such as calibration. This allows RDT to be run in both a real-time and a non-real-time configuration on a single computer with a single software build.

This configuration can be scaled to execute multiple instances of the RDT and run in parallel in a clustered or cloud computing environment to allow for Monte Carlo statistical variation and support high volumes of data. This data is used to assess the radar performance across hundreds or thousands of simulations. Note that RDT is platform-agnostic; it can be run on any machine that meets RDT’s minimum hardware requirements. Cloud computing solutions are the most popular choice for running RDT.
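As a rough illustration of the scaling idea described above, the sketch below runs several independent simulations in parallel, each with its own random seed, and collects one metric per run. Everything in it is an assumption for illustration: `run_simulation` is a hypothetical stand-in for a single RDT instance, the metric is a toy number rather than a real radar quantity, and a local thread pool stands in for cluster or cloud nodes.

```python
import random
import statistics
from concurrent.futures import ThreadPoolExecutor
from typing import List


def run_simulation(seed: int) -> float:
    """Hypothetical stand-in for one twin instance; returns a toy scalar metric."""
    rng = random.Random(seed)  # a per-run seed gives reproducible statistical variation
    samples = [rng.gauss(1.0, 0.1) for _ in range(1000)]  # made-up noisy measurements
    return statistics.fmean(samples)


def run_monte_carlo(num_runs: int, max_workers: int = 4) -> List[float]:
    """Run num_runs independent simulations, one seed each, in parallel."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() preserves input order, so result i belongs to seed i
        return list(pool.map(run_simulation, range(num_runs)))


results = run_monte_carlo(num_runs=20)
print(f"runs={len(results)} mean={statistics.fmean(results):.3f} "
      f"stdev={statistics.stdev(results):.4f}")
```

Seeding each run separately keeps every simulation reproducible, so a single anomalous case can be rerun in isolation.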

RDT’s Modular Open Systems Approach (MOSA) architecture allows for the construction of additional digital twins. The additional twins represent variations of the radar system with few changes to the source material. Various radar components can be swapped depending on the configuration the user wants to test. Components of RDT are also swappable with traditional M&S components.
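One common way to get this kind of swappability is to have every component satisfy a shared interface. The sketch below shows that pattern only; it is not RDT’s actual design. The `SignalProcessor` interface, both processor classes, and `RadarTwin` are hypothetical names, and the "processing" is deliberately trivial.

```python
from dataclasses import dataclass
from typing import List, Protocol


class SignalProcessor(Protocol):
    """Hypothetical component interface: anything with this method can be plugged in."""

    def process(self, samples: List[float]) -> float: ...


class WrappedTacticalProcessor:
    """Stand-in for a component that wraps end item code (toy logic: peak value)."""

    def process(self, samples: List[float]) -> float:
        return max(samples)


class SimplifiedModelProcessor:
    """Stand-in for a traditional M&S component (toy logic: average value)."""

    def process(self, samples: List[float]) -> float:
        return sum(samples) / len(samples)


@dataclass
class RadarTwin:
    """Hypothetical twin assembled from interchangeable components."""

    signal_processor: SignalProcessor

    def run(self, samples: List[float]) -> float:
        return self.signal_processor.process(samples)


samples = [1.0, 3.0, 2.0]
for component in (WrappedTacticalProcessor(), SimplifiedModelProcessor()):
    twin = RadarTwin(signal_processor=component)
    print(type(component).__name__, twin.run(samples))
```

Because both components accept the same input, running them side by side on identical data is one way to narrow down which of the two an issue belongs to.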

This blend of end item–deployed code and M&S models facilitates issue isolation and identification in both models. The end item–deployed code uses the same input as a system integration lab or test environment, so issues identified in fielded configurations can be easily tested with RDT.

In addition, RDT outputs the same data as the deployed system, due to the WTC methodology. This allows the same debugging tools, strategies, and procedures developed for the deployed radar system to be used with RDT, supporting reuse.

RDT provides quick assessment of the end item–deployed software in a simulated environment. Since RDT uses WTC methodology, the performance of the end item system, including its features and bugs, is present within RDT. RDT builds are produced in lockstep with the deployed system.

RDT’s ability to mirror the behavior of the deployed system has been a leading driver of ROI. For example, RDT can be deployed at the same time as, or sooner than, builds that make it to the laboratory floor. RDT loads are regression tested with the same inputs as the builds in the deployed configuration. Crashes, software bugs, and radar-performance issues associated with these loads are thus identified earlier in the test cycle for the end item system. Early identification means issues can be resolved with less effort (versus needing to staff a laboratory of engineers to run the same inputs as RDT).

Issue identification using RDT is more sophisticated than identifying problems in single-input situations. Radar performance metrics require much more than one test in the laboratory to assess. Typical test input in the end item configuration runs for dozens of minutes and requires multiple engineers to process the data. This process is often repeated to get a larger data sample size.

RDT’s parallelization feature allows hundreds or thousands of these simulations to be completed in far less time than on the end item system, on the order of 100x less. Since RDT exists in a digital-only environment, it can easily be paired with automation. The output of these parallelized simulations can be fed into analysis scripts that assess key radar metrics across the distribution of data.
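A minimal sketch of such an analysis script follows, assuming each simulation has already been reduced to one scalar metric. The function name, the reference value, and the tolerance are all hypothetical. The script summarizes the distribution and flags runs that stray from the reference, which is where edge cases tend to surface.

```python
import statistics
from typing import Dict, List


def summarize_runs(metric_by_run: List[float], reference: float,
                   tolerance: float) -> Dict[str, object]:
    """Summarize one metric across runs and flag runs far from a reference value."""
    outlier_runs = [
        run_index
        for run_index, value in enumerate(metric_by_run)
        if abs(value - reference) > tolerance  # run deviates more than allowed
    ]
    return {
        "mean": statistics.fmean(metric_by_run),
        "median": statistics.median(metric_by_run),
        "stdev": statistics.stdev(metric_by_run),
        "outlier_runs": outlier_runs,  # indices worth rerunning and inspecting
    }


# Made-up metric values from five runs; the fourth run is the odd one out.
summary = summarize_runs([1.0, 1.1, 0.9, 2.0, 1.0], reference=1.0, tolerance=0.5)
print(summary)
```

Reporting run indices rather than only aggregate statistics lets an engineer replay the exact simulation that produced an anomaly.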

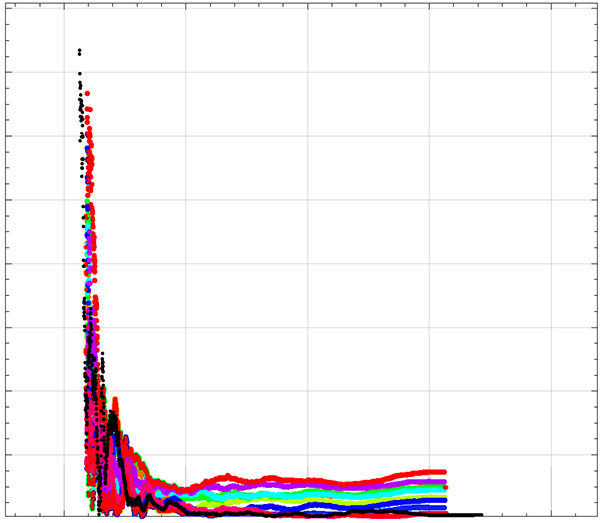

This automated analysis led to the discovery of harder-to-find edge-case issues in the end item software, saving thousands of person-hours. Figure 2 shows RDT Monte Carlo simulation output for low-level radar-performance metrics. The colored curves are 10 individual simulations, run in the clustered cloud computing configuration, and the black curve is reference data from the end item radar system. The figure shows how closely the RDT output tracks the end item radar system.

[For more from the author on this topic, see: “Digital Twins & the Defense Industry’s Digital Transformation.”]