CUTTER BUSINESS TECHNOLOGY JOURNAL VOL. 29, NO. 10

This issue of Cutter Business Technology Journal (CBTJ) is focused on cognitive computing. Cognitive computing is a term that is similar to, but currently more popular than, artificial intelligence (AI), and it refers to all those innovations in computing that are being driven by various types of AI research.

At the moment, cognitive computing applications can provide superior interfaces that allow computer systems to interact with customers or employees, with the Internet or massive databases, or with computer chips embedded in almost any item you might want to track. The applications, once trained, can talk with people using ordinary language, ask questions, and then provide answers or recommendations. Similarly, once chips are embedded in parts or products, those products can be tracked in real time and become part of the way you understand your supply chain or inventory. Cognitive applications are good at rapid searches of vast amounts of data. In many cases, the data can be in an unstructured format; cognitive applications can monitor TV news feeds, parse email exchanges, scan websites, or read documents, magazines, and journals in order to gain up-to-date information. In the most dynamic cases, battlefield simulation systems can employ satellites to track embedded chips in tanks, trucks, ships, or even military personnel and can use that data to create maps that tell battlefield commanders exactly where their resources are at any given moment.

In addition to simply identifying facts, cognitive systems can use deep neural networks to analyze huge amounts of data quickly, identify developing patterns, and predict or project what is likely to happen in the near future. Moreover, as cognitive systems are used, they learn and improve the quality of their predictions.

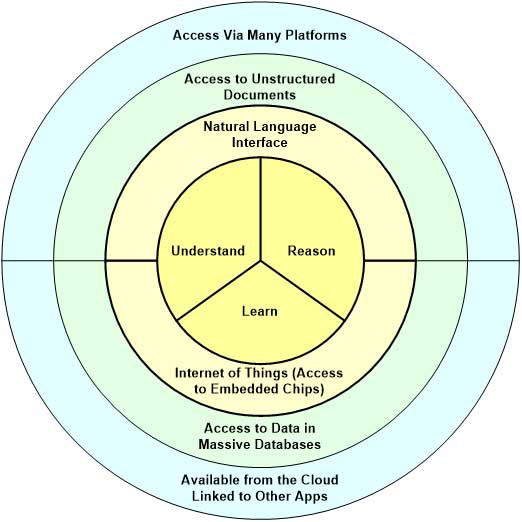

IBM summarizes all this by saying that cognitive computing involves understanding, reasoning, and learning. I would add two front-end capabilities: natural language interfaces and the Internet of Things. Taken together, these elements provide a pretty complete description of cognitive computing.

Figure 1 gives an overview of cognitive capabilities and shows some of the other closely related capabilities that business people would want to consider when thinking about how they might use cognitive computing to improve existing business processes or to transform the way their company does business.

The core capabilities of cognitive computing:

- To understand data of all kinds and answer user questions
- To reason about things and reach conclusions or make predictions
- To learn as new information becomes available or as the cognitive application gains experience

Figure 1 — A target diagram of cognitive computing and associated technologies.

Closely related capabilities include natural language interfaces, which make it possible for customers and/or employees to interact with the application using ordinary language, and the ability of an application to monitor hundreds or thousands of sensors embedded in the environment in order to understand minute-by-minute changes in where things are or what is happening.

Less exclusive to cognitive applications (but still of vital importance in understanding cognitive computing) is their ability to “read” unstructured data of all kinds, ranging from phone calls and emails to magazines and technical journals, and to mine massive databases to understand the data and to extract patterns from it.

Finally, one must keep in mind that cognitive computing is available from the cloud and can be delivered through nearly any medium that people use. Thus, we can field cognitive applications on smartphones, via automobile dashboards, or on digital assistants. We can also make cognitive applications available via kiosks or from chips embedded in a myriad of devices. Imagine an office machine that can talk with its user and help diagnose whatever problems the user may encounter. In the near future, if we desire, almost any artifact will be able to talk with us and help us reason about the problems we face.

Earlier this year, Cutter published an Executive Report that provided some broad overviews of cognitive computing. This issue of CBTJ provides something different — reports on specific uses of cognitive technology. The authors in this issue are more narrowly focused on specific aspects of cognitive computing, but we think you will find that they provide some good insights into key facets of this rapidly evolving technology.

Automating Software Development

Donald Reifer kicks off our issue with an article on the use of cognitive computing in software development. We all know that the automation of software development has been a goal of software development theorists for several decades; after all, computer-aided software engineering (CASE) was popular back in the late 1980s and early 1990s. Using software tools that drew on then-popular AI techniques, developers hoped to offload many of the day-to-day hassles involved in creating new software applications. Unfortunately, CASE products were launched just as software was transitioning from being mainframe-based to workstation-based and as new operating systems and languages such as Unix and C were being introduced. The details, in hindsight, aren’t important. Suffice it to say that software development was becoming much more complex, just as tools to develop software for simpler environments were emerging. Some of the ideas introduced with CASE have proved useful (CASE tools served, for example, as a foundation for business process modeling tools in subsequent years), but the overall goal of generating programmer-free software proved unattainable.

In recent years, those using AI to improve software development have focused on creating specialized tools that can aid developers in specific tasks. By combining software development capabilities with an ability to converse with developers, the new tools are making the development process easier. Indeed, Reifer believes that in five years’ time, cognitive computing approaches will enable machines to autonomously perform many of the intellectual tasks of software development, and he offers three scenarios in which this might be the case: software requirements definition, test automation, and “roll your own release.”

Creating Applications With Conversational Interfaces

Another use of cognitive computing has been the development of natural language interfaces for software systems. Once again, this has been a long-time goal of AI developers. Some of the first DARPA (Defense Advanced Research Projects Agency) AI projects were aimed at developing software that could translate Russian scientific articles into English, a goal that proved to be beyond the capabilities of either computers or natural language theories in the 1980s. Progress since then has been steady and has kept pace with the growth in computing power. We were all briefly thrilled when Apple announced that its iPhone would include an entity, Siri, that would be able to understand our questions and provide us with answers. Few who have tried to do much with Siri have been satisfied, but it certainly indicated that there is a market for spoken software applications, and Microsoft and others have come out with their own versions of talking assistants. In a similar vein, many of us were impressed in 2011 as we watched IBM’s Watson system defeat several human Jeopardy! champions, announcing its answers just like a human contestant.

Aravind Ajad Yarra gives us his own description of the history of natural language interface development and goes on to consider what kind of architecture is required to support conversational interfaces. Without getting into coding details, Yarra provides a good overview of the challenges developers will face as they seek to develop conversational interfaces for business applications.

Teaching Computers

Next, Karolina Marzantowicz focuses on how cognitive computing systems learn. When AI was commercially popular in the 1980s, the approach used at that time didn’t allow for learning. It took human developers quite a while to initially provide the knowledge needed to support cognitive activities, and it then required even more time to revise the knowledge as it changed. The maintenance problem proved so difficult that interest in the development of expert systems gradually died. Today’s renewed interest in cognitive computing is predicated on the use of new technology that has overcome the problems we faced back then. Today’s cognitive applications can learn and improve themselves as they are used. In her article, Marzantowicz explores how computers learn. It turns out that teaching a cognitive application is rather like mentoring a new associate: setting tests, offering hints, and doling out lots of reinforcement as the application learns more and provides better answers. Marzantowicz discusses some of the different techniques required for acquiring basic knowledge, for learning a natural language, and for learning to make sense of images.

Helping Managers Manage

Finally, Robert Ogilvie takes a broader look at some of the themes already developed and considers the likely role of cognitive computing in the near future. He suggests that automation has gone through three phases: a robotic phase, in which computers performed rote tasks; a social phase, in which computers have facilitated communication and collaboration; and a cognitive phase, in which computers are learning to use knowledge to solve intellectual problems. He indicates that most of the new cognitive systems will interact with and aid human performers. Rather than replace human managers, Ogilvie implies, cognitive computing applications will make existing managers more knowledgeable and better able to respond to a broader range of challenges. He goes on to offer an inspiring vision of cognitive computing applications that help individuals and teams focus their attention on the challenging problems that are really worthy of consideration.

Conclusion

Surveys suggest that most companies are just beginning to explore the use of cognitive computing. At the same time, however, the technology has racked up some impressive results. Moreover, most large companies still have people with AI experience from their past efforts, and such firms will be able to move quickly once they make a decision to commit to this approach. Given IBM’s strong backing of the technology, I am confident that automated intelligence will emerge as a dominant trend in the coming decade. Now is the time to study, learn, and experiment. Now is the time to start thinking about how to transform your organization with cognitive computing.