CUTTER IT JOURNAL VOL. 29, NO. 7

While the explosion of the Internet of Things (IoT) will present security risks in consumer-oriented applications such as smart houses or health monitoring, the consequences pale in comparison to the damage that can be inflicted, whether accidentally or intentionally, on critical infrastructures such as the electric grid, oilfields and refineries, smart cities, transportation systems, or nuclear plants. The ability to interconnect systems and the desire to make data available remotely for operational and analytical purposes have outpaced the implementation of appropriate security measures.

In this article, I provide examples of the risks and a discussion of the methods available for mutual identification, authorization, and access control between IoT devices and control systems, as well as protection of data and commands as they cross the network. I will also discuss how policies and risk management, not just the technology, need to be components of the overall approach.

IIoT vs. IoT

When we talk about the Industrial Internet of Things (IIoT, or Industrial Internet for short), we imply that the devices, and what we do with them and the information they supply, serve the purpose of an industrial actor, rather than that of a consumer. So we’re not signaling to a person, based on sensing their jogging pattern, that it is time to stop and drink water, or adjusting a household thermostat based on detecting that the owner is 10 minutes away from home after their day at work. Instead, we’re automating or recording something about a factory or other industrial environment and making decisions that improve how this environment performs. The Industrial Internet Consortium (IIC), the most active nonprofit membership-based organization in this domain, defines the IIoT as “the convergence of machines and intelligent data,” or “an Internet of things, machines, computers, and people enabling intelligent industrial operations using advanced data analytics for transformational business outcomes.”

Note, in passing, that the last part of this definition (“enabling…”) distinguishes the Industrial Internet from its predecessor, Supervisory Control and Data Acquisition (SCADA). SCADA systems typically operate in a closed loop, reacting in real time without any longer-term analysis of the data they capture, which they often do not even store.

The boundary between the consumer-oriented IoT and the Industrial Internet is not sharp. Certain applications overlap or form a continuum of solutions that can be viewed from the perspective of an individual user or from that of an industrial stakeholder. For example, a smart home can function in a closed loop for the benefit of its residents, but if the data from the home devices is sent to the electric company, it can help the utility optimize the delivery of electricity and decrease the cost of peak generation. There are similar examples of overlap in areas such as remote patient monitoring, smart cities, water management, smart cars, and more.

The distinction between the consumer and industrial use of the IoT approach is not so much in the types of devices used, or the nature of the analytics performed on the data, as it is in the objectives of the system. The consumer IoT is generally aimed at providing better personal services, such as a more comfortable home with lower energy bills, a faster route to one’s destination, or an alert that your prescription refill is ready when you are driving near your pharmacy. The main objectives of the Industrial Internet are things such as:

- Preventing outages and failures

- Decreasing energy usage in a factory

- Scheduling equipment maintenance based on actual usage, not on a fixed cycle time

- Optimizing the routing of shipments based on traffic conditions

- Tracking expensive assets to optimize their allocation

- Surveilling unmanned locations

- Detecting leaks and other hazards

- Improving safety through personnel monitoring

For example, Marathon Oil, in collaboration with Accenture, has equipped a refinery with gas detectors that help create a real-time map of hazards in the control room. Operators wear connected devices that track their location and movement, alert them when they approach a dangerous zone (based on gas level sensors), and send an alert if they stop moving. Incidents are automatically logged. This deployment has resulted not only in safety improvements, but also in greater regulatory compliance and better observance of safety rules by personnel, who know that their movements are being recorded and that this is to their benefit.

Security Challenges in the IIoT

It is hardly necessary to explain or justify that security is a concern when we think of applying IoT technology to industrial applications, but it is useful to consider how it differs in this context from the consumer domain. In the consumer-oriented IoT, some of the main threats might be:

- Stealing personal information, such as credit card numbers

- Finding out when a house is unoccupied (e.g., by remotely observing the thermostat settings or the energy consumption) in order to plan a robbery

- Tracking delivery trucks in order to steal shipments left at the doors of unoccupied homes

- Tracking the movements of people (e.g., if they drive in a connected car) for kidnapping purposes — a problem endemic to certain developing countries

- Nuisance actions, such as setting all the thermostats in a neighborhood to the wrong temperature as a misguided joke or as a “badge of honor” for hackers

- Capturing a surveillance camera feed for intimidation, blackmail, or the like

Done on a small scale, these actions are petty crimes. Done on a large scale by an organized agent, in an area where there are many connected homes and cars, they could serve to create panic — a form of psychological attack for economic or ideological purposes.

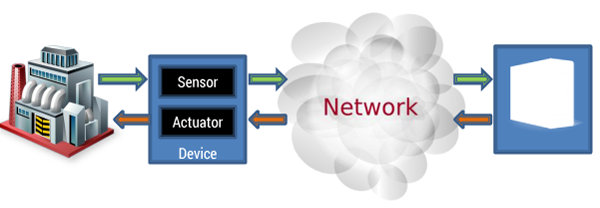

In the industrial world, the risks are generally different and present immediate danger on a larger scale. Figure 1 is an oversimplified view of the components that an Industrial Internet system connects. (Note that the “actuator” part of a device is not always present. Many devices in an IIoT network — and sometimes all of them — are passive sensors.)

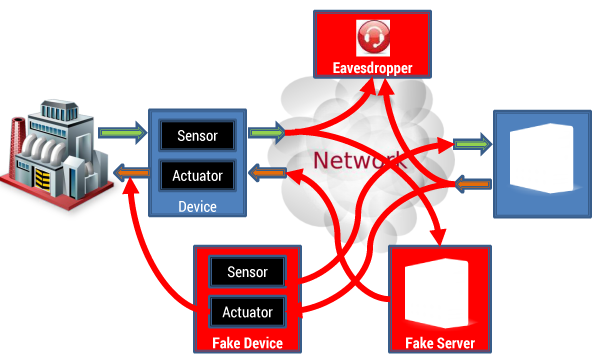

Figure 2, by contrast, adds to this diagram the various ways in which attacks can be performed on such a system. This diagram shows three forms of attack, against which most current systems are woefully unprotected:

- Eavesdropping. This is the least disruptive form of attack, because intercepting traffic between a device and a control system or an analytics application does not directly affect the system's operation. Unfortunately, this also makes it the type of intrusion least likely to be detected. The listener's goal will often be industrial espionage, or it can be government-sponsored monitoring of economic activity. The goal may also be monetary: by monitoring the information that traverses the IIoT network, a third party may be able to predict certain fluctuations in commodity or stock prices and profit from knowing about impending events ahead of the market, or it can sell the information to an unscrupulous competitor. There is also a growing incidence of extortion, akin to “ransomware” (malware that encrypts a victim's data and demands payment to restore it): the intruder accesses enough information to prove to the owner of the system that it contains serious vulnerabilities, then demands a large sum of money to cease the attack or to provide remediation information.

- Device masquerading. Inserting a fake device into the network is actually fairly easy in most cases, because enrolling a device in the system is usually a very primitive process: simply connecting a device physically to the network is often enough for it to start receiving and sending data. And since sensing devices may be manufactured by the tens or hundreds of thousands, they are fairly easy to procure from legitimate manufacturers. This form of intrusion can result in several distinct (and non-exclusive) consequences:

  - The fake sensor can inject false data into the network to cause erroneous reactions or interpretations. These may include false alarms (i.e., sending data that appears to indicate a malfunction when there is none, which may trigger a disruptive shutdown), or data that skews the analysis of what is going on in the physical world (e.g., reporting higher or lower values of a key measurement) so that the control system sends commands that affect production or create dangerous conditions.

  - A fake device that includes an active component can receive information and intentionally perform inappropriate actions, such as increasing the speed of a motor instead of decreasing it, turning a monitoring light green when it was supposed to be red, and so on.

  - A “denial of service” attack can be performed by a fake device to overwhelm the network with messages, preventing normal operations. At that point, if the industrial system’s design is fail-safe, it may shut down safely; if not, the consequence can be an accident.

- Server masquerading. Especially when the IIoT network uses the cloud rather than a private network to connect devices to servers, it is possible to insert a fake server into the network. As long as that machine is able to discover the addresses of the devices on the network and “speaks” the same communication protocol as the devices, it could send them requests or commands. IIoT networks are not well protected against this form of attack because the devices rarely have the logic (or the hardware and software capability) to authenticate the servers that are talking to them. Most devices will simply respond to well-formed requests or commands without verifying that the originating machine is legitimate (IP addresses can be spoofed). With this form of attack, it is even easier to cause sensors to send their data to an unauthorized recipient or cause active devices to shut down a machine or a valve — or reopen it in a destructive manner after a legitimate command has closed it.
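One common way to blunt the message-flooding scenario described above is to rate-limit traffic per device where it enters the control network. The Python sketch below is illustrative only: the names `TokenBucket` and `Ingress`, the rate and burst values, and the device IDs are hypothetical. Keying the limiter on a device ID only helps if that ID cannot be forged, and it cannot stop flooding at lower network layers, which must be handled by switch configuration and network design.

```python
import time

class TokenBucket:
    """Allows `rate` messages per second on average, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = float(burst)
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

class Ingress:
    """Hypothetical ingress filter: one bucket per enrolled device ID."""

    def __init__(self, enrolled, rate: float, burst: int, clock=time.monotonic):
        self.buckets = {dev: TokenBucket(rate, burst, clock) for dev in enrolled}

    def admit(self, device_id: str) -> bool:
        bucket = self.buckets.get(device_id)
        return bucket is not None and bucket.allow()  # unknown IDs are dropped
```

Traffic claiming an unknown ID is dropped outright, and an enrolled device that suddenly starts flooding is throttled to its configured rate instead of starving other devices. The injectable `clock` parameter exists only to make the behavior testable.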

At this point, readers may wonder why companies that deploy such capabilities do not simply isolate them from the Internet to make sure that attacks cannot be performed remotely. There are a few reasons why the remedy is not that simple:

- We live in an increasingly connected and global world. With remote operations monitoring, the control room is often thousands of miles away from the equipment it monitors, and engineering an international private network would be costly. In theory, one can implement a secure, encrypted virtual private network that piggybacks on the public Internet, but organizations may lack the awareness or the expertise to put the right solutions in place. Furthermore, the Internet Protocol has inherent vulnerabilities, since it was not designed with the current level of threat in mind.

- Solutions that were initially designed to be accessed from within the firewall have often been extended to provide outside access. A manufacturing manager may want to see a dashboard of their factory’s operations on their smartphone after dinner or when they get up. As soon as legitimate access is provided to one device from the outside, a potential port of illegitimate entry has been opened.

- Even if the industrial network is isolated from the outside, malware can be brought in through other methods. The Stuxnet attack, described in the next section, is a good example.

When we consider the opportunities for cyberattacks in the industrial world, and the potential severity of their consequences, we are reminded of the famous, though possibly apocryphal, dialogue between reporter Mitch Ohnstad and serial bank robber Willie Sutton: “Why did you rob the bank, Willie?” “Because that’s where the money is!”

Sample IIoT Cyberattacks

In this section, we’ll look at three examples of Industrial Internet cyberattacks as a way to show the methods of attack, the types of vulnerabilities they exploited, and the potential consequences. These incidents also serve to show that IIoT vulnerabilities are nothing new.

The BTC Pipeline (2008)

A concrete example of how security vulnerabilities can escalate into physical disasters is provided by the explosion of the Baku-Tbilisi-Ceyhan (BTC) pipeline in 2008, a story fairly well known within the oil industry but not outside of it. According to the Wikipedia article on this accident:

On 5 August 2008, a major explosion and fire in Refahiye (eastern Turkey Erzincan Province) closed the pipeline. The Kurdistan Workers Party (PKK) claimed responsibility. The pipeline was restarted on 25 August 2008. There is circumstantial evidence that it was a sophisticated cyberattack on line control and safety systems that led to increased pressure and explosion. The attack might have been related to [the] Russo-Georgian War that started two days later.

At the time of the accident, the BTC pipeline capacity was 1 million barrels per day (it has since been increased), and the price of oil stood at almost US $125 per barrel. With 20 days of lost deliveries, the potential economic damage, had the pipeline been running at full capacity, could have reached $2.5 billion.
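The $2.5 billion figure is simple arithmetic on the numbers above. The short Python sketch below reproduces it from the dates in the quoted account, assuming full-capacity flow for the whole outage; because oil was slightly under $125, the result is an upper bound rather than an exact loss.

```python
from datetime import date

def lost_delivery_value(barrels_per_day: float, days: int, price_per_barrel: float) -> float:
    """Value of deliveries forgone during an outage, assuming full-capacity flow."""
    return barrels_per_day * days * price_per_barrel

# Outage window from the quoted account: explosion on 5 August, restart on 25 August 2008.
outage_days = (date(2008, 8, 25) - date(2008, 8, 5)).days

# Figures from the text: 1 million barrels/day capacity, oil at almost US $125/barrel.
estimate = lost_delivery_value(1_000_000, outage_days, 125)
print(outage_days, f"${estimate:,.0f}")
```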

It seems that this was a two-pronged attack, electronic as well as physical. The attackers penetrated the monitoring and control system for the pipeline through the network of security cameras placed along the pipeline — cameras that in effect were nodes in an IP network but were installed without changing their default factory passwords. Having penetrated the network, the attackers disabled alarm systems. Finally, they either manipulated valves and compressors to increase the pressure until the pipeline blew up, or (in a more credible version) an explosives truck was detonated next to the pipeline.
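The entry point in this account, networked cameras still using factory-default passwords, is one of the easiest weaknesses to audit for. The following Python sketch assumes, hypothetically, that an asset inventory records each device's model, username, salt, and PBKDF2 password hash; the `VENDOR_DEFAULTS` table, model names, and device IDs are invented for illustration. A real audit would draw its defaults from vendor documentation and might instead attempt logins in a controlled test window.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical factory defaults per device model (illustrative only).
VENDOR_DEFAULTS = {
    "acme-ipcam": [("admin", "admin"), ("admin", "1234")],
    "example-rtu": [("root", "root")],
}

ITERATIONS = 100_000

def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)

@dataclass
class Device:
    device_id: str
    model: str
    username: str
    salt: bytes
    password_hash: bytes  # PBKDF2-SHA256, as recorded in the inventory (assumption)

def uses_default_credentials(dev: Device) -> bool:
    for user, password in VENDOR_DEFAULTS.get(dev.model, []):
        candidate = hash_password(password, dev.salt)
        if user == dev.username and hmac.compare_digest(candidate, dev.password_hash):
            return True
    return False

def audit(inventory: list) -> list:
    """Return the IDs of devices still using a known factory default."""
    return [dev.device_id for dev in inventory if uses_default_credentials(dev)]
```

Note that a model missing from the defaults table is silently treated as compliant, so the table's coverage matters as much as the check itself.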

This happened in 2008, well before we started seriously talking about the Internet of Things, let alone the Industrial Internet. In fact, the BTC pipeline did not really include an analytics component, but the attack leveraged the presence of connected devices and took into account the presence of a remote control room.

Stuxnet (2010)

Stuxnet, a computer worm discovered in 2010, was introduced into Iranian nuclear research facilities and disabled a number of that country’s controversial uranium enrichment centrifuges. It was reportedly developed jointly by US and Israeli intelligence agencies for this specific purpose. Let’s look at some of the key aspects of this attack: how it happened and what its impact was.

There was a widespread belief, until Stuxnet was let loose, that programmable logic controllers (PLCs) used to control most industrial equipment were immune to viruses because these PLCs do not run a real operating system. But those PLCs, by definition, are programmable. And in an installation like the ones in Iran, the industrial control system connected to multiple PLCs runs Microsoft Windows and the Siemens Step 7 industrial control software. Therefore, infecting the host system was possible using the same techniques used to infect any Windows machine. Then, the Step 7 software could be subverted to reprogram the PLCs, causing them to execute incorrect instructions that would damage the equipment.
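Because Stuxnet worked by changing the logic running on the PLCs, one defensive measure is to fingerprint each PLC program block against a known-good baseline and flag any difference. The sketch below is a minimal Python illustration with hypothetical block names and contents; how the blocks are read out of the PLC is left abstract. One caution: Stuxnet reportedly concealed its modified code from the Step 7 software on the infected host, so such a comparison is only trustworthy if the blocks are read through a path the attacker does not control.

```python
import hashlib

def fingerprint(blocks: dict) -> dict:
    """Map each program block name to the SHA-256 digest of its contents."""
    return {name: hashlib.sha256(code).hexdigest() for name, code in blocks.items()}

def diff_against_baseline(baseline: dict, current_blocks: dict) -> dict:
    """Compare freshly read blocks with a known-good set of digests."""
    now = fingerprint(current_blocks)
    return {
        "modified": sorted(n for n in baseline.keys() & now.keys() if baseline[n] != now[n]),
        "added": sorted(now.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - now.keys()),
    }

# Hypothetical block names and byte contents, for illustration only.
golden = {"OB1": b"\x01\x02\x03", "FC10": b"\x10\x20"}
baseline = fingerprint(golden)  # recorded when the logic was commissioned

tampered = {"OB1": b"\x01\x02\x03", "FC10": b"\x10\x21", "OB35": b"\xff"}
print(diff_against_baseline(baseline, tampered))
```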

On the impact side, many people in the Western world might applaud the motive of the attackers. However, just as it is hard to contain a biological virus, this electronic one got loose and infected a number of systems in other countries. Of the reported infections, 59% were in Iran, 18% in Indonesia, and 1.5% in the US. Siemens had to distribute a detection and removal tool to its clients.

How was Stuxnet introduced into the presumably highly controlled environment of an Iranian nuclear research facility? According to one account, through a USB flash drive handed out at an international conference to an attendee who wanted a copy of an interesting PowerPoint presentation, and whose PC was connected to the facility’s intranet.

Ukraine Utility Attack (2015)

We could go on with many more examples. This last one is worth mentioning because it is recent, which suggests that little has changed since 2008, except perhaps the scale of the attacks and the sophistication of the perpetrators.

On 23 December 2015, several Ukrainian utility companies were disabled, cutting off power to hundreds of thousands of homes for several hours. There were multiple actions leading to the blackout, indicating long-term planning and a high degree of sophistication:

- A “spear phishing” attack (targeted social engineering) started as early as May 2015 and resulted in installing the KillDisk program in advance on the computers used by utility operators. The malware was left dormant until the time of the attack. KillDisk not only causes a PC to crash, it also prevents it from rebooting.

- The BlackEnergy malware was used to gain access to the utilities’ systems.

- At the time of the attack, three things took place simultaneously: the infiltrated control systems were told to “flip the switch” and disconnect power; KillDisk was activated to render the operators’ PCs useless; and a “telephone denial of service” attack was launched to flood the utilities’ call centers, preventing legitimate customers from reporting outages.

Because the operators could no longer use their PCs and had no real information on the extent of the outage, they had to travel to the various substations and restore power manually.

Protecting Critical Infrastructures

As the saying goes, this situation is going to get worse before it gets better. First, the number of systems controlling expensive or potentially dangerous infrastructure (e.g., pipelines, power plants, the electrical grid, airports) is going to grow, and humans will be increasingly taken out of the loop, at least for routine operations, because of the complexity and speed of the decisions required to optimize operations.

Second, cyberwarfare skills have become available not only to intelligence agencies but also to hackers pursuing various motives, who no longer need to be expert malware developers. Hacking groups sell software and services on the “dark Web,” often getting paid in bitcoin. Yet while attackers have this array of nefarious tools at their disposal, defenders are still plagued by human and technical vulnerabilities, such as the exchange of USB drives or clicking on an unknown attachment.

Finally, many groups have a stake in disrupting the operations of large industrial systems: actual terrorists, of course, but also intelligence agencies (as in the case of Stuxnet and probably of the Ukrainian attack), various criminal groups (perhaps looking to extract ransom payments), as well as “hacktivists” pursuing various ideological campaigns or trying to turn public opinion against certain policies and practices (e.g., nuclear energy, genetic engineering, offshore drilling).

Prosecutors look for three things in order to accuse someone of a crime: a motive, a weapon, and an opportunity. We just saw that all three are present: there are groups who want to do harm, they have a veritable catalog of malware at their disposal, and the systems they want to attack are not well enough protected. It is not surprising that attacks occur; it is actually surprising that there aren’t more.

Let’s now examine some of the approaches needed to get the situation under control.

Extending Identity and Access Management to the Industrial Internet

The identity and access management of IoT devices is not well handled today. When an enterprise adds a new human user (i.e., an employee or contractor), that person needs to be issued a username and password in order to access various systems. They can be asked to perform some of the registration activities, entering certain privileged information on a website in order to be assigned their credentials. A hardware device cannot do that. As noted above, physically connecting a new device to the network is usually all it takes for that device to start receiving and sending data.

Fixing this loophole requires that each device be given an identity that cannot be faked. One solution is to issue a public key infrastructure (PKI) certificate to each device and store the corresponding private key in a tamper-resistant electronic module. The device can then authenticate itself by answering a random challenge with a signature made with its private key, which the server verifies using the public key in the device's certificate. Conversely, the device must respond only to legitimate commands from a verifiable source. This means that the device needs to be able to issue its own challenge to the source of a request. Finally, if the device and the server both have PKI capabilities and certificates, they can use them to set up encrypted sessions and so prevent eavesdropping.
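The mutual challenge-response just described can be sketched in Python. To stay self-contained with the standard library, this sketch swaps in a symmetric-key technique (HMAC-SHA256 over a random nonce, with a per-device shared secret) for the certificate-based signatures described in the text; the class and function names, and the assumption that a secret is provisioned at enrollment, are hypothetical.

```python
import hashlib
import hmac
import secrets

def respond(key: bytes, role: bytes, challenge: bytes) -> bytes:
    """Prove knowledge of `key` by computing an HMAC over the peer's challenge.

    Binding the responder's role into the MAC stops a reflection attack, in
    which an attacker bounces a party's own challenge back to it.
    """
    return hmac.new(key, role + b"|" + challenge, hashlib.sha256).digest()

class Party:
    """One end of the exchange (a device or a server) holding a shared secret."""

    def __init__(self, role: bytes, key: bytes):
        self.role = role
        self.key = key
        self.pending = None  # the challenge this party issued and is waiting on

    def new_challenge(self) -> bytes:
        self.pending = secrets.token_bytes(16)  # fresh random nonce per attempt
        return self.pending

    def answer(self, challenge: bytes) -> bytes:
        return respond(self.key, self.role, challenge)

    def check(self, peer_role: bytes, response: bytes) -> bool:
        if self.pending is None:
            return False
        expected = respond(self.key, peer_role, self.pending)
        self.pending = None  # each challenge is single-use
        return hmac.compare_digest(expected, response)  # constant-time comparison

def mutual_auth(device: Party, server: Party) -> bool:
    # The server challenges the device...
    device_ok = server.check(device.role, device.answer(server.new_challenge()))
    # ...and the device challenges the server before acting on any command.
    server_ok = device.check(server.role, server.answer(device.new_challenge()))
    return device_ok and server_ok
```

In a PKI deployment, `respond` would become a signature made with the party's private key and `check` a verification against the public key in its certificate, so no secret has to be stored on both ends. Either way, the key material should stay inside the tamper-resistant module.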

Traditional Security Countermeasures

As we saw in the attacks described earlier, human fallibility is an important element of risk. As long as people plug a USB flash drive of unknown origin into their PC, or follow a hyperlink in a convincingly worded message, we can expect malware to penetrate at least regular computers used by people in their daily work.

The challenge, therefore, is to prevent the further spread of malware through defense-in-depth mechanisms. An industrial network should be isolated from the enterprise network to provide a second layer of defense. Intrusion prevention and detection systems should of course be run, and penetration tests conducted regularly, although the proliferation of zero-day (previously unknown) exploits makes it impossible to guarantee 100% protection.
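Isolating the industrial network usually means placing a default-deny boundary between zones, with only a few explicitly approved flows crossing it, often through an intermediate demilitarized zone (DMZ). The following Python sketch models such a policy check. The zone names and allowlisted ports are hypothetical; 502 is the standard Modbus/TCP port, used here as an example of traffic that should never reach the control zone straight from the enterprise network.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Flow:
    src_zone: str
    dst_zone: str
    protocol: str
    port: int

# Hypothetical allowlist. The enterprise network never talks to the control
# zone directly; both sides meet in a DMZ (e.g., at a data historian replica).
ALLOWED_CROSS_ZONE = frozenset({
    Flow("enterprise", "dmz", "tcp", 443),  # users read dashboards over HTTPS
    Flow("control", "dmz", "tcp", 8443),    # control zone pushes data outward
})

def is_permitted(flow: Flow) -> bool:
    """Default deny: a cross-zone flow passes only if explicitly allowlisted."""
    if flow.src_zone == flow.dst_zone:
        return True  # intra-zone traffic is out of scope for this sketch
    return flow in ALLOWED_CROSS_ZONE
```

Because every cross-zone flow is denied unless listed, a compromised enterprise PC cannot open a Modbus session to a controller even if it learns the controller's address.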

The software running on enterprise and industrial systems is still plagued by common vulnerabilities such as buffer overflows, SQL injection, and so forth. Secure coding practices need to be taught and enforced, drawing in particular on the work of MITRE (e.g., its Common Weakness Enumeration, CWE) and the Consortium for IT Software Quality (CISQ).
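SQL injection, one of the vulnerabilities named above, is easy to demonstrate and easy to prevent. This Python sketch uses the standard `sqlite3` module and a hypothetical `devices` table: the first function builds the query by string formatting, so a crafted input changes the query's logic, while the second uses a parameterized query, the standard remedy.

```python
import sqlite3

def find_device_unsafe(conn: sqlite3.Connection, tag: str):
    # VULNERABLE: the input is pasted into the SQL text, so quotes in `tag`
    # can change the structure of the query.
    sql = f"SELECT id FROM devices WHERE tag = '{tag}' ORDER BY id"
    return conn.execute(sql).fetchall()

def find_device_safe(conn: sqlite3.Connection, tag: str):
    # Parameterized query: the driver binds `tag` as a value, never as SQL.
    return conn.execute(
        "SELECT id FROM devices WHERE tag = ? ORDER BY id", (tag,)
    ).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE devices (id INTEGER PRIMARY KEY, tag TEXT)")
conn.executemany("INSERT INTO devices (tag) VALUES (?)", [("PT-101",), ("FT-202",)])

payload = "x' OR '1'='1"
print(find_device_unsafe(conn, payload))  # the injected OR clause matches every row
print(find_device_safe(conn, payload))    # treated as a literal tag: no match
```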

Strategy and Governance: The IT/OT Divide

The previous paragraphs could lead the reader to focus on technology solutions, but as usual in IT matters, the tools are unlikely to be effective absent good governance, strategy, and organization.

In many organizations, there is a schism between information technology (IT), which is devoted to enterprise systems, collaboration tools, end-user devices, and user support, and operational technology (OT), which includes manufacturing systems and the growing realm of the Industrial Internet. While some companies try to “put lipstick on a pig” by claiming that this division of responsibilities is a good thing, it really emerged for three negative reasons:

- IT developed a reputation, not altogether undeserved, that it doesn’t understand the business.

- IT has usually instituted a project management process that is the opposite of agility and is unable to respond to the changing needs of operations.

- IT personnel are trained in the development and operations of traditional enterprise systems, using general-purpose operating systems, programming languages, databases, and user interfaces — not in the integration of real-time cyber-physical systems running on resource-constrained, dedicated devices.

Once OT is separated from IT, however, it may not possess the skills necessary for security management in a hostile world. Operations personnel are very good at resolving problems quickly — potentially taking shortcuts that introduce vulnerabilities into their systems.

Organizations in which this schism between IT and OT exists should consider how to cross-pollinate the two sides with the necessary skills and processes so they can work better together to ensure the security of industrial systems. In some cases, the split should be reconsidered. This may require difficult decisions — for example, if the CIO of an industrial organization doesn’t know enough (or worse, doesn’t care) about the operations side of the company, perhaps they are the wrong person for the job.

Collaboration

The work of several organizations can be leveraged to help address the issues. I will mention just three of them:

- The IIC is preparing a “common security framework” to assess and improve the protection of industrial systems.

- The US Department of Homeland Security (DHS), recognizing the threat to critical infrastructure elements posed by IIoT vulnerabilities, has launched several initiatives, including an Industrial Control Systems Cyber Emergency Response Team (ICS-CERT).

- The Object Management Group (OMG) is evolving its Data Distribution Service (DDS) standard to add a security layer as well as the capability to run in eXtremely Resource-Constrained Environments (XRCE).

Adoption Challenges

None of these measures is free or easy to implement. There are multiple challenges to their adoption:

- Technically, implementing particular measures, such as device authentication and encrypted communications, requires at least a certain amount of computing power and memory on each device. The installed base of SCADA devices often lacks both. Either those devices need to be replaced by more capable ones, or intermediary systems (often called “gateways”) will need to be installed to add security management features to a cluster of dumb devices.
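A gateway of the kind just mentioned can add message integrity on behalf of legacy sensors that cannot compute it themselves. In the Python sketch below, which is illustrative rather than a complete protocol, a hypothetical `Gateway` tags each reading with a sequence number and an HMAC-SHA256 under a key shared with the backend, and the backend rejects readings whose MAC does not verify or whose sequence number is not newer than the last one accepted. Sensor names and key provisioning are assumptions, and the link between the sensors and the gateway remains unprotected, so it must be physically secured.

```python
import hashlib
import hmac
import json
import secrets

class Gateway:
    """Hypothetical gateway that authenticates readings from legacy sensors."""

    def __init__(self, key: bytes):
        self.key = key
        self.seq = 0  # monotonically increasing message counter

    def wrap(self, sensor_id: str, value: float) -> dict:
        self.seq += 1
        body = json.dumps({"sensor": sensor_id, "value": value, "seq": self.seq}, sort_keys=True)
        mac = hmac.new(self.key, body.encode(), hashlib.sha256).hexdigest()
        return {"body": body, "mac": mac}

class Backend:
    """Accepts only authentic, fresh readings from one gateway."""

    def __init__(self, key: bytes):
        self.key = key
        self.last_seq = 0

    def accept(self, msg: dict):
        expected = hmac.new(self.key, msg["body"].encode(), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, msg["mac"]):
            return None  # forged or corrupted in transit
        reading = json.loads(msg["body"])
        if reading["seq"] <= self.last_seq:
            return None  # replayed or out of order
        self.last_seq = reading["seq"]
        return reading

key = secrets.token_bytes(32)  # shared secret, provisioned out of band (assumption)
gw, backend = Gateway(key), Backend(key)
msg = gw.wrap("PT-101", 42.0)
print(backend.accept(msg))  # first delivery: the decoded reading
print(backend.accept(msg))  # same message again: rejected as a replay
```

This sketch does not encrypt the readings, and it would reject every message after a gateway restart resets its counter; a real design would persist the counter or negotiate a fresh session, for example over TLS.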

- Cost is an obvious factor, especially if implementing security requires replacing existing control systems and devices with newer ones or adding the gateways just mentioned. Deploying a PKI or similar capability with perhaps thousands of certificates — one per device in addition to one per server — also implies a significant cost.

- Retrofitting security on an existing installation may require interrupting operations.

- If an organizational change is required, or if operations management is ill-prepared to give security the priority it deserves, the difficulty and cost of change management should not be underestimated.

Recommendations and Conclusion

There is no such thing as absolute security. But since the Industrial Internet is likely to be a magnet for cyberattacks, just as banks were for Willie Sutton, organizations need to be prepared to defend themselves with all the means at their disposal. If they fail to do so, their ability to conduct their basic business will be in jeopardy. A successful attack against a nuclear power plant or a refinery, followed by the likely discovery that negligence or naiveté played some part in making it possible, could bankrupt the target of the attack or damage an entire industry.

The first step, as in the case of any operational threat, is to conduct a threat assessment. In the case of the BTC pipeline, a rigorous threat assessment of the pipeline system was only conducted after the fact.

The next step is to make IIoT systems more resilient by removing bad security practices that are not specific to these systems but can have more severe consequences in this domain. The errors fall into well-known categories: mistakes in software development, mistakes in system and security management, and vulnerability of users to social engineering practices.

A third step is to make sure that the governance and organization are in place to establish and track the goals, strategies, objectives, and tactics required to populate a plan of action.

Finally, technology can be introduced to strengthen the systems, and this step should leverage collaboration with leading organizations, such as the IIC, and industrial partners, such as the manufacturers of the IIoT equipment used in the network or system integrators with experience in this field.