CUTTER IT JOURNAL VOL. 29, NO. 7

Prepare for the unknown by studying how others in the past have coped with the unforeseeable and the unpredictable.

What does a quote by General Patton have to do with cyber and physical threats and the Internet of Things (IoT)?

After over 40 years of the Internet faithfully serving the needs of the Earth’s human population for information, communication, and entertainment, we have now entered the era of the IoT. Of course, when we refer to the Internet, we also mean the Web and therefore the Web of Things (WoT), where distributed applications benefitting from networking through the Internet are no longer a privilege of humans. Things can also take full advantage of the capabilities, simplicity, and potential of Web technologies and protocols. Following current developments in this field, it is not difficult to see the inevitability of the convergence of the two worlds, of humans and of things, each using the Internet as a primary means of communication. Possibly the most appropriate term to describe this evolution has been proposed by Cisco: the Internet of Everything (IoE), which “brings together people, process, data, and things to make networked connections more relevant and valuable than ever before.” In the IoE era, machines are equal to humans as Internet users.

In an ecosystem in which everything is connected, and where physical and cyber converge and collaborate, the threats of the two worlds not only coexist, but also converge, creating a still largely unknown environment, in which an attack in cyberspace can propagate and have an adverse effect in physical space and vice versa. So how can we be prepared for and confront this new unknown? How can we study and learn from the ways this has been dealt with in the past? First, it is important to simplify the problem by attempting to identify the components of IoE and the threats and effects an attack can have in each one.

Composing the Internet of Everything and Decomposing It to Its Threats

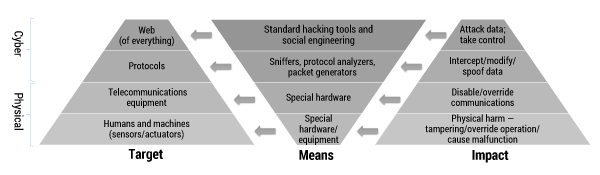

Let us pick up the thread of IoE evolution and follow it back to its origin, identifying its composing elements and corresponding threats in terms of attack impact and the means to achieve it. We will not use the standard computer network practice of visualizing a layered hierarchical structure, such as the one found in the OSI or TCP/IP models, but will structure the components based on their physical or virtual representation, with the cyberworld built on top of the physical (see Figure 1). In this representation, we can identify four different layers of components for the IoE:

- The lowest physical layer corresponds to the “everything” of the IoE, including humans and things (i.e., sensors, actuators, and embedded systems that combine them). This is the basis of the physical part of our representation, including all communicating members in the IoE ecosystem. An attack here can directly affect humans or things, either by causing an adverse physical effect on humans or by making devices operate in ways that do not comply with their specification or their users’ intentions. Such an attack may require special hardware and equipment that can affect the operation of machines and, through them, the physical privacy, convenience, or, in extreme cases, health and safety of human beings (e.g., as a result of a physical explosion in the context of the Industrial Internet or a malfunctioning life support medical device).

- The physical world representation is completed by the telecommunications equipment. In a connected world, we tend to take the telecommunications infrastructure for granted, focusing only on protocols and applications. Even though an attack at this level would require considerable access to resources, the results could be catastrophic, as the clear impact of such an attack would be to render all of the communication infrastructure incapable of operation in its intended way (or even to completely disable it). Such attacks are achieved through special hardware, which is able either to operate in a non-standard way (thereby overriding communications) or damage communication equipment (e.g., signal jammers, frequency transmitters).

- From the physical part of the IoE pyramid, we now move up to the cyber part, the lower layer of which consists of the communication protocols. This part is probably the most familiar to communication engineers, as attacks here target the transport of data in order to intercept it, modify it, and/or prevent it from reaching its intended destination, or simply to generate illegitimate traffic. As this is the most widely studied layer, there are several means and tools for achieving any kind of mischief in this space, such as protocol sniffers and analyzers, packet generators, and so on.

- The top layer is the most recently introduced, but also the one that is evolving the most quickly: the Web of Things. Here, the attacks no longer target the communication between IoE entities, but the actual purpose for the communication, focusing on the information/data and control. Depending on the target, standard hacking tools or social engineering can be used.

Based on these four layers, we refer to those attacks that target the sensing and telecommunications infrastructures as physical security breaches, while we refer to attacks that target the IoE at the level of the protocols and the WoT as cybersecurity breaches. The following is a brief overview of security threats that correspond to each of the four layers.

Physical Security Breaches

Sensing

Depending on the type of sensing technology a system uses, a capable adversary with physical access may attempt to deceive the sensor.¹ For instance, some infrared sensors are known to have difficulty detecting objects hidden behind window glass. Wearing a costume made of foam or other material that absorbs sound waves can theoretically defeat ultrasonic motion sensors. Full-body scanners based on backscatter X-ray imaging technology might fail to detect a pancake-shaped plastic device with beveled edges taped to the abdomen, a firearm affixed to the outside of the leg and scanned against a dark background, and so on. These techniques for deceiving sensors used by physical security systems have been proven experimentally and documented publicly by researchers.

One particularly impressive example of such experimental attacks aimed to defeat lidar (Light Detection and Ranging) by producing an overwhelming number of spoofed echoes or objects. This could be loosely characterized as a sensory channel denial of service attack. A simpler but no less effective attack on cameras has been demonstrated by the same authors. By emitting light into a camera (e.g., with a laser pointer), they showed that it is possible to blind the camera by rendering it unable to tune auto exposure or camera gain, effectively hiding objects from its view.

Another interesting example is the use of sound-based attacks that aim to impede the ability of drones to maintain themselves in an upright position. This approach, described by researchers at the Korea Advanced Institute of Science and Technology, works by generating a sound at a frequency close to the resonant frequency of a drone’s microelectromechanical systems (MEMS) gyroscope. If the gyroscope’s resonant frequency is within the audible range, as is the case for several types used in commercial drones, the sound causes resonance. This severely increases the standard deviation of the gyroscope’s output and consequently incapacitates the drone. The civilian drone used in their experiments crashed shortly after the attack every time.
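Because the attack shows up as a sharp rise in the standard deviation of the gyroscope's output, one conceivable defensive measure is to watch that statistic over a sliding window. The following is a minimal sketch under stated assumptions, not the researchers' method: the class name `GyroNoiseMonitor`, the window size, and the `ratio_limit` threshold are all hypothetical and would need tuning for a real device.

```python
import statistics
from collections import deque


class GyroNoiseMonitor:
    """Flags a gyroscope whose recent output is far noisier than its baseline.

    baseline_std, window and ratio_limit are illustrative assumptions.
    """

    def __init__(self, baseline_std, window=50, ratio_limit=5.0):
        self.baseline_std = baseline_std
        self.ratio_limit = ratio_limit
        self.samples = deque(maxlen=window)  # keeps only the newest readings

    def update(self, angular_rate):
        """Add one reading; return True if the full window looks anomalous."""
        self.samples.append(angular_rate)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough data yet to judge
        spread = statistics.pstdev(self.samples)
        return spread > self.ratio_limit * self.baseline_std


monitor = GyroNoiseMonitor(baseline_std=0.02)
# Small, regular jitter stands in for normal operation.
quiet = [0.01 * ((i % 5) - 2) for i in range(50)]
# Large oscillation stands in for output disturbed by acoustic resonance.
disturbed = [2.0 if i % 2 == 0 else -2.0 for i in range(50)]
alarms = [monitor.update(x) for x in quiet + disturbed]
print("alarm raised:", any(alarms))
```

A monitor like this only detects the symptom. What the drone should do once the alarm fires (for example, fall back to another sensor) is a separate design question.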

Telecommunications

As telecom operators are rapidly embracing the cloud in order to improve their efficiency in operations, services rollout, and content storage and distribution, the need for cloud security is becoming critical, yet security strategies seem to be in place for only 50% of telecom companies. In addition, the emerging trend of “shadow IT” (i.e., the use of IT systems within an organization without the involvement of corporate IT) intensifies security and privacy risks, adding personal data protection requirements to the traditional challenge of uninterrupted service provision.

Cybersecurity Breaches

Protocols

In order to enable communication between things in the IoE, different protocols governing communication at all layers of the communication stack need to be deployed, each presenting different challenges in terms of security. Though the risks and impacts are well known (i.e., interception of communications, alteration of transmitted data, spoofing of information), the tools and means that attackers can use to achieve these impacts differ. As new protocols appear, new threats (in the form of old ones appearing in new clothes) arise, and countermeasures have to be reinvented. Unfortunately, there is no recipe for success here, as past experience is only to the advantage of the attackers. After all, you have to know the threat before you can confront it.

Web of Things

As in the case of the World Wide Web, attacks here may have little to do with the communication or even the computing infrastructure. The virtualization offered by the Web, where traditional services have been substituted by their “e-quivalents,” has opened a new world of cyber rather than physical threats. Instead of someone capturing something tangible and asking ransom for it, ransomware can block access to an electronic asset or service and ask for ransom in an automated manner. In the case of devices and things being part of the WoT, the situation can get even worse: seizing access to critical infrastructure could lead to serious hazards affecting public health or other key public sector services. With electronic devices controlling practically every critical infrastructure, from power grids to telecommunications and even elections, protecting the connected things over the Web from cyberattacks is critical.

An Overarching Threat: Deception

Traditionally, the attack avenue that has overcome most technical security measures is deception. In computer security, the term used is “semantic attack,” which is “the manipulation of user-computer interfacing with the purpose to breach a computer system’s information security through user deception.” Although not as precise, an umbrella term that is commonly used for both technical and nontechnical deception attacks of this sort is “social engineering.” Social engineering attacks can range from email phishing and infected website adverts to fraudulent Wi-Fi hotspots and USB devices with misleading labels, all of which aim to lure human users into disclosing private data, such as their password, or performing some compromising action such as downloading malware. The great strength of deception-based attacks is that technology security measures can be rendered irrelevant. For instance, it does not matter whether users have installed strong firewalls and antivirus systems in their home network if they themselves are fooled into downloading malware on the smartphone that they have linked to all their smart home devices.

To an extent, the concept of deception can be extended to physical threats. Examples would include most sensory channel attacks described in the previous section, as their aim is likewise to deceive (in this case a sensor rather than a user). Sensor-based systems are designed and operate with the assumption that they can trust their sensors to provide an honest, even if inaccurate, representation of their physical environment. Sensory channel attacks ensure that this is not the case.

Use Cases

To illustrate the extent and breadth of cyber and physical threats to the IoE, we have chosen to focus on the aspects that directly and comprehensively affect a citizen’s actual safety and perception of safety. In this section, we discuss the brief history, current state, and future of physical and cyber threats to automobility, the domestic environment, and well-being. For each one, we investigate whether General Patton’s approach of turning to (safety and security) history for solutions would help.

Automobility

Over the past seven years, attacks on modern connected cars have become a highlight in pretty much every high-profile security conference. It would not be an exaggeration to observe that there is such a thing as automotive cyber fatigue, with reports of different models of cars hacked making the news every week. In fact, it is seen as so obvious that citizens’ private cars will become targets of cyberattacks that researchers have already started studying the impact that future cybersecurity warnings will have on drivers.² Will drivers interpret the warnings correctly? Will they be affected psychologically, and would that by itself compromise their safety? This is a concern in the IoE in general. It is accentuated in the automotive sector because of the imminent danger to drivers and passengers posed by a mere distraction from a security warning, let alone an extensive security breach affecting the engine or brakes.

But surely this is not the first time the automotive industry has had to deal with driver distraction from warnings. The European Union (EU), the US, and several other countries have developed rigorous guidelines for the provision of information to drivers in a manner that reduces driver distraction by taking into account single glance duration, amount and priority of information, and so forth. In 2015, the International Organization of Motor Vehicle Manufacturers issued a recommended worldwide distraction guideline policy, which can be adopted by engineers developing mechanisms and content for in-car cybersecurity warnings as well. It is also not the first time the automotive security sector has dealt with command injection attacks that render critical systems unavailable. For example, large-scale EU-funded projects, such as FP7 EVITA, have provided practical solutions for securing the communication and embedded computation of modern cars since the previous decade, long before concerns about the cybersecurity of cars reached the mainstream media. The increased vulnerability of modern cars to cyber-physical threats due to recently introduced technologies can be addressed with existing security mechanisms, as long as the buyer is willing to pay for them. So, it is more a matter of price elasticity than of availability of cyberprotection solutions for cars.

Currently, sensory channel attacks can be considered too exotic to be a significant concern. This is expected to gradually change as automation and reliance on sensing increases, especially in the automotive sector. A prime example is the driverless car, which is expected to become a commercial reality in the next decade. As driverless cars depend heavily on lidar, a low-cost device that generated volumes of misleading fake objects would render them completely unable to operate. This would be a very intelligent type of attack without doubt, but from the perspective of automotive safety, the end result is an unreliable or unavailable sensor. If one ignores for a moment the malicious intent, this is just one more case of a sensor reliability problem, which mathematicians, computer scientists, physicists, and engineers have addressed in great detail over several decades. After all, given the adverse environment in which a car’s sensor operates, it is much more likely to fail naturally than as a result of a sensory channel attack. Therefore, redundancy (i.e., using more than one sensor for the same data collection, often supported by an algorithm for estimating a sensor’s predicted measurements) and diversity (i.e., using more than one type of sensor for the same problem and cross-correlating their data) are natural solutions for cyber-physical resilience, too.
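To make the two ideas concrete, here is a minimal sketch of how redundancy and diversity might be combined for a single distance estimate. It is an illustration under assumptions rather than an automotive-grade design: the function names, the choice of radar as the second sensor type, and the tolerance values are all hypothetical.

```python
import statistics


def fuse_redundant(readings, max_spread):
    """Redundancy: combine same-type sensors, dropping outliers around the median.

    Returns the mean of the trusted readings and how many were trusted.
    """
    median = statistics.median(readings)
    trusted = [r for r in readings if abs(r - median) <= max_spread]
    return statistics.mean(trusted), len(trusted)


def cross_check(lidar_m, radar_m, tolerance_m):
    """Diversity: accept the lidar distance only if a different sensor type agrees."""
    return abs(lidar_m - radar_m) <= tolerance_m


# Three lidar units measure the same obstacle; one reports a spoofed echo.
lidar_readings = [25.1, 24.9, 3.0]
distance, used = fuse_redundant(lidar_readings, max_spread=1.0)

# A radar reading (hypothetical value) is used as an independent check.
radar_reading = 24.6
print("fused lidar distance:", distance, "from", used, "sensors")
print("radar agrees:", cross_check(distance, radar_reading, tolerance_m=1.0))
```

Median-based voting tolerates a minority of bad sensors whether they failed naturally or were deceived, which is why the same mechanism serves both reliability and resilience. It does not help if an attacker can deceive most sensors of every type at once.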

The Domestic Environment

Most smart home devices are small and inexpensive. They frequently have to offload their processing and storage to a cloud environment and rely on the home router for security, unable to provide any sophisticated onboard protection mechanism. On a real-world commercial level, security is usually limited to a simple authentication mechanism, typically a password, which is often left to its factory default, in addition to HTTPS encryption of network traffic. Guessing or stealing the password via commonplace social engineering semantic attacks or compromising any of the control interfaces of the smart home, such as the owner’s smartphone or a corresponding cloud-based interface, is sufficient to take full control of its devices. In practice, it has been argued that a typical smart home’s only real protection is the fact that network address translation (NAT) prevents individual devices from being directly visible on the Internet and that the number of devices globally is still not large enough to economically justify the focus of organized cybercriminals. This is rapidly changing. The total number of connected devices (including smart homes, wearables, and other smart devices) is projected to grow to between 19 and 40 billion globally by 2019.

Yet the reality is that almost all actual cybersecurity breaches publicly reported in this context have involved no extraordinary effort or advanced hacking techniques and would have been prevented if common sense and age-old security design principles, such as those proposed by Jerome Saltzer and Michael Schroeder in the 1970s, had been followed (see sidebar). Take, for instance, the principle of the least common mechanism. Companies that develop cameras for home automation systems tend to reuse their code for multiple versions and multiple models, but assumptions originally made about their use may no longer be valid as more features are added, leading to libraries with excess features and security holes. Similarly, such principles as economy of mechanism and minimization of attack surface are commonly violated by smart lock developers. For example, in a home with multiple door entrances (e.g., a main one with a smart lock and a conventional one from the garage), a user may accidentally unlock more doors than the one they used when returning home. That is because of the (possibly excessive) auto-unlock feature, which unlocks a door when the user is returning home and is within 10 meters (for Bluetooth low energy) of the door. However, just because a user is near a door does not necessarily mean that they intend to unlock it.
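The auto-unlock problem can be sketched in a few lines. The example below contrasts a proximity-only rule with one that also requires an explicit sign of intent. It is a hypothetical illustration: the `DoorState` fields, the idea of an `intent_signal` (such as touching the handle), and the function names are assumptions, not features of any particular product.

```python
from dataclasses import dataclass


@dataclass
class DoorState:
    name: str
    distance_m: float    # estimated distance from the user's phone to this door
    intent_signal: bool  # hypothetical explicit signal, e.g. touching the handle


def unlock_by_proximity(doors, max_distance_m=10.0):
    """The excessive rule: every door within range is unlocked."""
    return [d.name for d in doors if d.distance_m <= max_distance_m]


def unlock_by_intent(doors, max_distance_m=10.0):
    """A narrower rule: a door must be in range and explicitly requested."""
    return [d.name for d in doors
            if d.distance_m <= max_distance_m and d.intent_signal]


doors = [
    DoorState("main", distance_m=1.0, intent_signal=True),
    DoorState("garage", distance_m=6.0, intent_signal=False),
]
print("proximity only:", unlock_by_proximity(doors))
print("proximity and intent:", unlock_by_intent(doors))
```

The second rule is smaller in effect, not in code: it trades a little convenience for a door that opens only when the user has asked for it.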

Similar examples of violations of the basic security design principles can be found behind most security failures in domestic environments. The problem is that embedded system developers simply do not know them. In fact, these principles (as well as other relevant subjects, such as wireless networks and cloud computing) are often omitted from the curricula of software engineering bachelor’s degree programs. Making sure that basic security design principles are included in software developers’ education and expected by standardization bodies would be sufficient to prevent the vast majority of security breaches without needing to develop new security technologies.

- Economy of mechanism. Keep the design as simple and small as possible.

- Fail-safe defaults. Base access decisions on permission rather than exclusion.

- Complete mediation. Every access to every object must be checked for authority.

- Open design. The design should not be secret.

- Separation of privilege. Where feasible, a protection mechanism that requires two keys to unlock it is more robust and flexible than one that allows access to the presenter of only a single key.

- Least privilege. Every program and every user of the system should operate using the least set of privileges necessary to complete the job.

- Least common mechanism. Minimize the amount of mechanism common to more than one user and depended on by all users.

- Psychological acceptability. It is essential that the human interface be designed for ease of use, so that users routinely and automatically apply the protection mechanisms correctly.
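As a rough illustration of how three of these principles translate into code, the sketch below applies fail-safe defaults, complete mediation, and least privilege to a toy smart home. The permission table, user names, and device names are invented for the example.

```python
# Hypothetical permission table: (user, device) -> set of allowed actions.
# Least privilege: each user is granted only the actions they need.
PERMISSIONS = {
    ("owner", "front_door"): {"lock", "unlock"},
    ("guest", "front_door"): {"lock"},
    ("owner", "camera"): {"view"},
}


def is_allowed(user, device, action):
    """Fail-safe default: anything not explicitly granted is denied."""
    return action in PERMISSIONS.get((user, device), set())


def perform(user, device, action):
    """Complete mediation: every request is checked, with no cached decisions."""
    if not is_allowed(user, device, action):
        raise PermissionError(f"{user} may not {action} {device}")
    return f"{device}: {action} ok"


print(perform("owner", "front_door", "unlock"))
try:
    perform("guest", "front_door", "unlock")
except PermissionError as err:
    print("denied:", err)
```

Note that an unknown user or device needs no special handling: the lookup returns an empty set, so the request is denied by default.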

Well-Being and Healthcare

The episode of the TV drama Homeland in which terrorists assassinate a senator by tampering with his pacemaker (accelerating his heartbeat) was seen by many as far-fetched, but it was a scenario that former US Vice President Dick Cheney considered sufficiently realistic in 2007 to ask his doctors to disable his pacemaker’s wireless functionality. This was long before “connected healthcare” and today’s numerous options of available wearable devices. In fact, the term “wearable” already falls short of describing the variety of devices capable of monitoring and wirelessly reporting on well-being and personal health status. If we were to correctly identify all options, then we should also add implantable, adhesive, patched, and even ingestible devices. Regarding the latter, the US Food and Drug Administration (FDA) recently declined to approve the first mass-market drug incorporating an ingestible sensor (a collaboration between Proteus and Otsuka Pharmaceuticals), instead requesting more tests and “data regarding the performance of the product under the conditions in which it is likely to be used, and further human factors investigations.”

Despite this temporary regulatory roadblock, it is inevitable that personalized, daily monitoring of our health conditions and well-being will be carried out through the use of one or more devices, while it is also only a matter of time and acclimation before we widely accept a more active role for these devices: insulin pumps, drug infusion pumps, and pacemakers with integrated defibrillators. The FDA has already acknowledged the criticality of the situation, issuing recommendations on how manufacturers should safeguard medical devices against cyberbreaches, making security by design a top priority.

In terms of safety criticality, there appears to be a distinction between devices that are related to health issues (e.g., a blood pressure monitor device and/or app) and those that relate to well-being (e.g., calorie calculators, apps to help you quit smoking), but even this is debatable. Imagine your bedside device, programmed to lull you to sleep through the gentle sound of waves and wake you up at the right time (based on your sleep cycle) with the sweet sound of a nightingale. It does not take more than compromising your IFTTT account to change the settings so that at 3 am you’re woken up to a loud AC/DC power riff. Or consider a compromised training app that pushes you to speed up for the last mile on your jogging run, instead of stopping you based on your (increased beyond normal) heart rate readings. If you are in good health, these breaches would simply be nuisances, but for a person with a heart condition, they could be life-threatening incidents.

So where in security practice do we turn for inspiration when it comes to protecting our well-being and health from cyberattacks? Suitably, it is probably more about hygiene (i.e., cyberhygiene) than anything else. Medical devices in hospitals are notorious for relying on very old operating systems (e.g., Windows XP in network-connected MRI machines) that have long been discontinued in every other industry and are no longer supported officially. It is not that demanding to expect medical software developers to provide updates in the same way as the rest of the IT industry, but will hospitals install them? Introduction of cyberhygiene training would be the minimum requirement for the needed culture shift. It would also help if medical personnel refrained from leaving Post-it Notes with their shared account passwords on hospital computers.

Then, there is privacy in wearables. This is commonly based on proprietary/secret cryptography methods, so as to tick the “encryption” box, but closed cryptography is never as robust as open cryptography because it has not been subjected to the same level of scrutiny. It is a key principle in cryptography, set by Dutch cryptographer Auguste Kerckhoffs in the 1880s, that a cryptographic system “must not be required to be secret, and it must be able to fall into the hands of the enemy without inconvenience.” Once again, following cyberhygiene and age-old security principles should be sufficient to thwart most realistic cyber-physical threats.
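Kerckhoffs's principle can be illustrated with any published, widely reviewed primitive, where the key is the only secret. The sketch below uses HMAC-SHA-256 from Python's standard library to authenticate a wearable's reading. Note the assumptions: this protects integrity rather than privacy (the standard library does not ship a cipher), and the function names, key, and message format are hypothetical.

```python
import hashlib
import hmac


def tag_reading(key: bytes, reading: bytes) -> str:
    """Authenticate a reading with HMAC-SHA-256, a public and well-studied algorithm."""
    return hmac.new(key, reading, hashlib.sha256).hexdigest()


def verify_reading(key: bytes, reading: bytes, tag: str) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(tag_reading(key, reading), tag)


# The algorithm is public; only this key (a placeholder here) must stay secret.
device_key = b"k" * 32
reading = b"heart_rate=72"
tag = tag_reading(device_key, reading)

print("genuine reading accepted:", verify_reading(device_key, reading, tag))
print("tampered reading accepted:", verify_reading(device_key, b"heart_rate=172", tag))
```

An attacker who knows every detail of this code still cannot forge a valid tag without the key, which is the property a secret, home-grown scheme cannot credibly claim.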

Parting Thoughts

Security is about protecting from realistic threats that require realistic effort. Protecting against extraordinary threats that require extraordinary effort has never been a goal in this sector, because it would simply never be practical. The advent of the IoT, and soon the IoE, has undoubtedly expanded the attack surface and the range of our daily life activities that are affected. By supporting devices rather than being supported by devices, the Internet of Everything may be much larger than the Internet we have become used to, but it is still the Internet. If we have been able to produce trustworthy communication over basic communication infrastructure and network protocols designed in the 1970s, there is little fundamentally new in terms of emerging threats in the IoE. It is still about protecting systems and networks by following security principles that have withstood the test of time, whether these are the original principles by Saltzer and Schroeder, modern cybersecurity hygiene practices, or mere common sense, preferably cultivated through security education for software developers and awareness programs for users.

Endnotes

1. Loukas, George. Cyber-Physical Attacks: A Growing Invisible Threat. Butterworth-Heinemann (Elsevier), 2015.

2. Altschaffel, Robert, et al. “Simulation of Automotive Security Threat Warnings to Analyze Driver Interpretations and Emotional Transitions.” Chapter 5 in Computer Safety, Reliability, and Security, edited by Floor Koornneef and Coen van Gulijk. Springer, 2015.