CUTTER BUSINESS TECHNOLOGY JOURNAL VOL. 31, NO. 2

Keng Siau and Weiyu Wang examine prevailing concepts of trust in general and in the context of AI applications and human-computer interaction in particular. They discuss the three types of characteristics that determine trust in this area: human, environment, and technology. They emphasize that trust building is a dynamic process and outline how to build trust in AI systems in two stages: initial trust formation and continuous trust development.

In 2016, Google DeepMind’s AlphaGo beat 18-time world champion Lee Sedol at the abstract strategy board game Go. This win was a triumphant moment for artificial intelligence (AI) that thrust AI more prominently into public view. AlphaGo’s successors, AlphaGo Zero and AlphaZero, have further demonstrated the enormous potential of artificial intelligence and deep learning. Meanwhile, self-driving cars, drones, and home robots are proliferating and advancing rapidly. AI is now part of our everyday life, and its encroachment is expected to intensify.1 Trust, however, is a potential stumbling block. Indeed, trust is key to ensuring the acceptance and continuing progress and development of artificial intelligence.

In this article, we look at trust in artificial intelligence, machine learning (ML), and robotics. We first review the concept of trust in AI and examine how trust in AI may be different from trust in other technologies. We then discuss the differences between interpersonal trust and trust in technology and suggest factors that are crucial in building initial trust and developing continuous trust in artificial intelligence.

What Is Trust?

The level of trust a person has in someone or something can determine that person’s behavior. Trust is a primary reason for acceptance. Trust is crucial in all kinds of relationships, such as human-social interactions,2 seller-buyer relationships,3 and relationships among members of a virtual team. Trust can also define the way people interact with technology.4

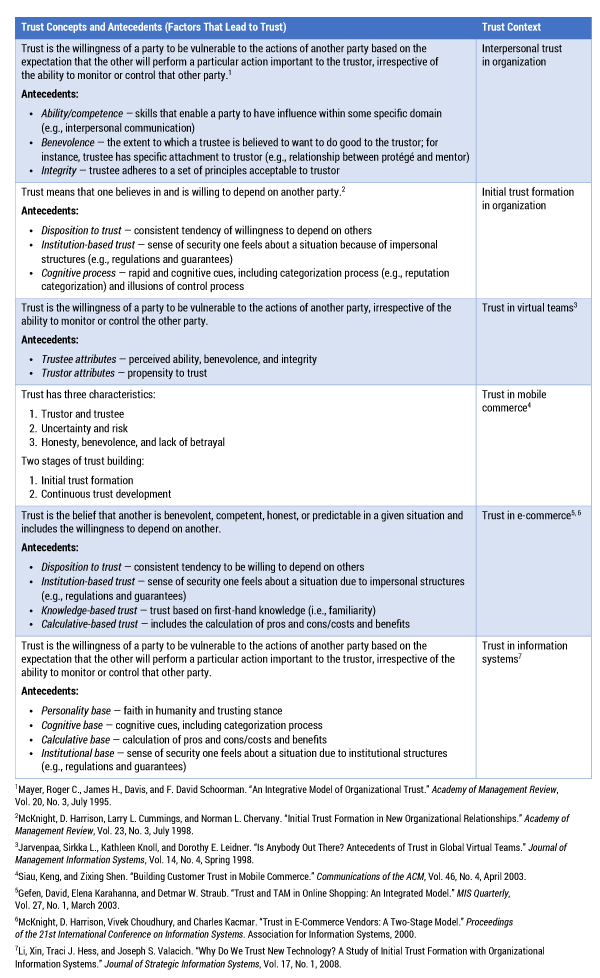

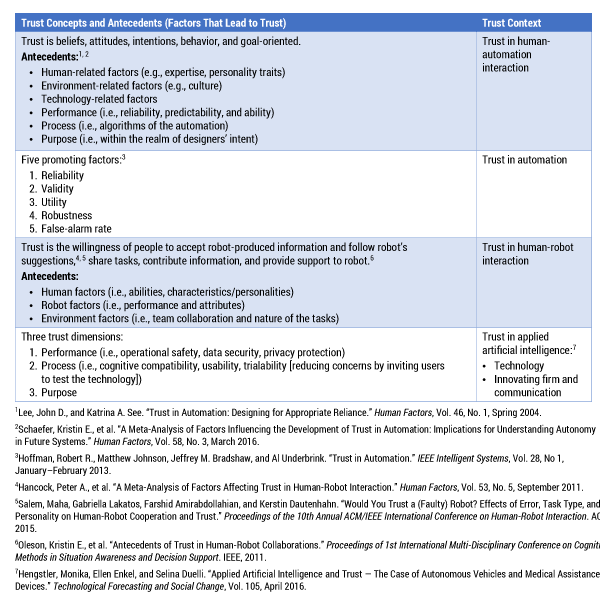

In the literature, trust is viewed as: (1) a set of specific beliefs dealing with benevolence, competence, integrity, and predictability (trusting beliefs); (2) the willingness of one party to depend on another in a risky situation (trusting intention); or (3) a combination of the two. Table 1 lists some concepts and antecedents of trust in interpersonal relationships and of the trust people place in specific types of technology, such as mobile technology and information systems. The conceptualization of trust in human-machine interaction, however, is slightly different (see Table 2).

Table 1 — Conceptualization of trust and its antecedents.

Table 2 — Trust conceptualization in human-machine interaction.

Compared to trust in an interpersonal relationship, in which the trustor and trustee are both humans, the trustee in a human-technology/human-machine relationship could be the technology itself, the technology provider, or both. Further, trust in the technology and trust in its provider influence each other (see Figure 1).

Trust is dynamic. Empirical evidence has shown that trust is typically built up gradually, through ongoing two-way interactions.5 Sometimes, however, a trustor decides whether to trust a trustee, such as an object or a relationship, before gaining firsthand knowledge of it or having any kind of experience with it. For example, when two people meet for the first time, their first impressions affect the trust between them. In such situations, trust is based on the individual’s disposition to trust or on institutional cues. This kind of trust is called initial trust, and it is essential for promoting the adoption of a new technology. In the context of AI, both initial trust formation and continuous trust development deserve special attention.

What Is the Difference Between Trust in AI and Trust in Other Technologies?

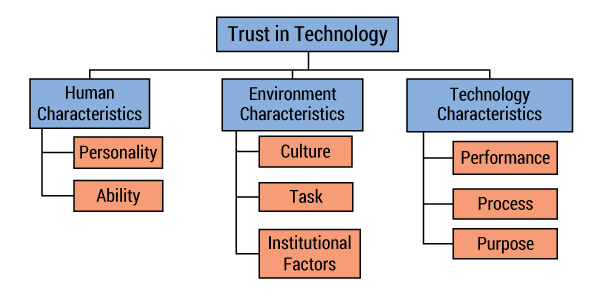

Trust in technology is determined by human characteristics, environment characteristics, and technology characteristics. Figure 2 shows the factors and dimensions of trust in technology.

Human characteristics include the trustor’s personality or disposition to trust and the trustee’s ability to deal with risks. The trustor’s personality or disposition to trust can be thought of as a general willingness to trust others, and it depends on individual experiences, personality types, and cultural backgrounds. Ability usually refers to a trustee’s competence, or set of skills, for completing tasks in a specific domain. For instance, if an employee is very competent in negotiation, a manager may trust that employee to take charge of negotiating contracts.

Environment characteristics focus on elements such as the nature of the tasks, cultural background, and institutional factors. Tasks differ in nature; for example, a task may be very important or trivial. Cultural background can be based on ethnicity, race, religion, and socioeconomic status, and it can also be associated with a country or a particular region. For instance, Americans tend to trust strangers who share the same group memberships, whereas Japanese tend to trust those who share direct or indirect relationship links. Institutional factors are the impersonal structures that enable one to act in anticipation of a successful future endeavor. According to the literature, institutional factors have two main aspects: situational normality and structural assurances. Situational normality means that the situation is normal and everything is in proper order. Structural assurances are contextual conditions such as promises, contracts, guarantees, and regulations.

No matter who or what the trustee is, whether a human, a form of AI, or an entity such as an organization or a virtual team, the impact of human characteristics and environment characteristics will be roughly similar. For instance, a person with a high-trusting stance would be more likely to accept and depend on a new technology or a new team member. Similarly, a technology provided by an institution or organization with a strong reputation will gain users’ trust more easily than a similar technology from an institution or organization without such a reputation.

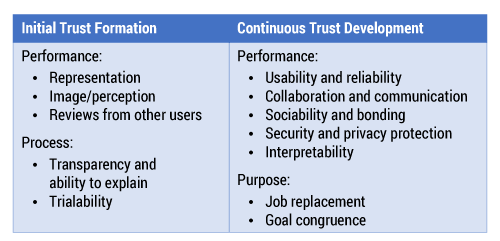

Technology characteristics can be analyzed from three perspectives: (1) the performance of the technology, (2) its process/attributes, and (3) its purpose. Although human and environment characteristics affect trust similarly irrespective of the trustee, the technology characteristics that impact trust differ for AI, ML, and robotics compared with other objects or humans. Since artificial intelligence has many features that other technologies lack, its performance, process, and purpose need to be defined and considered. Table 3 organizes the technology features related to AI’s performance, process, and purpose, and their impact on trust, according to a two-stage model of trust building.

Table 3 — Technology features of AI that affect trust building.

Building Initial Trust in AI

Several factors are at play in building initial trust. These factors include the following:

- Representation. Representation plays an important role in initial trust building, which is why humanoid robots are so popular: the more a robot looks like a human, the more easily people can establish an emotional connection with it. A robot dog is another example of an AI representation that humans find easier to trust. Dogs are known as humans’ best friends and represent loyalty and diligence.

- Image/perception. Science fiction books and movies have given AI a bad image by depicting intelligence that we create and then lose control of. Artificial general intelligence (AGI) or “strong AI”6 can indeed be a serious threat. This image and perception will affect people’s initial trust in AI.

- Reviews from other users. Reading online reviews is common these days, and reviews from other users affect the initial trust level: a positive review leads to greater initial trust than a negative one.

- Transparency and “explainability.” To trust AI applications, we need to understand how they are programmed and what functions they will perform under given conditions. This transparency is important, and AI should be able to explain and justify its behaviors and decisions. One of the challenges in machine learning and deep learning is the “black box” nature of their learning and decision-making processes. If the explainability of an AI application is poor or missing, trust suffers.

- Trialability. Trialability is the opportunity for people to access an AI application and try it before accepting or adopting it. Trialability enhances understanding. In an article in Technological Forecasting and Social Change, Monika Hengstler et al. state that “when you survey the perception of new technologies across generations, you typically see resistance appear from people who are not users of technology.” Thus, giving potential users opportunities to try a new technology will promote higher initial trust.

Developing Continuous Trust in AI

Once trust is established, it must be nurtured and maintained. This happens through the following:

- Usability and reliability. Performance includes both the AI’s competence in completing tasks and its ability to complete those tasks consistently and reliably. The AI application should be designed to be easy and intuitive to operate, and there should be no unexpected downtime or crashes. Usability and reliability contribute to continuous trust.

- Collaboration and communication. Although most AI applications are developed to perform tasks independently, the most likely scenario in the short term is that people will work in partnership with intelligent machines. How smoothly and easily humans and machines can collaborate and communicate will affect continuous trust.

- Sociability and bonding. Humans are social animals. Continuous trust can be enhanced with social activities. A robot dog that can recognize its owner and show affection may be treated like a pet dog, establishing emotional connection and trust.

- Security and privacy protection. Operational safety and data security are two prominent factors that influence trust in technology. People are unlikely to trust anything that is too risky to operate. Data security matters because machine learning relies on large data sets, which often contain personal information, making privacy a concern.

- Interpretability. Because they operate as black boxes, most ML models are inscrutable. To address this problem, it is necessary to design interpretable models and give machines the ability to explain their conclusions or actions. This helps users understand both the rationale for the outcomes and the process by which the results were derived. Transparency and explainability, as discussed under initial trust building, are important for continuous trust as well.

- Job replacement. Artificial intelligence can surpass human performance in many jobs and replace human workers, and AI will continue to enhance its capabilities and expand into more domains. Concern about AI taking jobs and replacing human employees will impede people’s trust in artificial intelligence; for example, those whose jobs may be replaced by AI may not want to trust it. Some predict that more than 800 million workers, about a fifth of the global labor force, might lose their jobs in the coming years. Lower-skilled, repetitive, and dangerous jobs are among those most likely to be taken over by machines. Providing retraining and education to affected employees will help mitigate this effect on continuous trust.

- Goal congruence. Since artificial intelligence has the potential to match and even surpass human intelligence, it is understandable that people treat it as a threat. Indeed, AI, especially AGI, should be perceived as a potential threat! Ensuring that AI’s goals are congruent with human goals is a prerequisite for maintaining continuous trust. The ethics and governance of artificial intelligence are areas that need more attention.

Practical Implications and Conclusions

Artificial intelligence is here, and AI applications will become more and more prevalent. Trust is crucial in the development and acceptance of AI. In addition to the human and environment characteristics, which affect trust in other humans, objects, and AI, trust in AI, ML, and robotics is affected by the unique technology features of artificial intelligence.

To enhance trust, practitioners can strengthen the technology features of AI systems that correspond to the factors listed in Table 3. Representing an AI as a humanoid or a loyal pet (e.g., a dog) will facilitate initial trust formation, whereas an image of AI as a killing machine (as in the Terminator movies) will hinder it. In the Internet age, user reviews are critical, as is the ability of artificial intelligence to be transparent and to explain its behavior and decisions; both are important for initial trust formation. The ability to try out AI applications will also affect initial trust.

Trust building is a dynamic process that moves from initial trust formation to continuous trust development. Continuous trust will depend on the performance and purpose of the artificial intelligence. AI applications that are easy to use and reliable, that collaborate and interface well with humans, that have social ability and facilitate bonding with humans, that provide good security and privacy protection, and that explain the rationale behind their conclusions or actions will facilitate continuous trust development. Conversely, a lack of clarity about job replacement and displacement by AI, along with AI’s potential threat to the existence of humanity, breeds distrust and hampers continuous trust development.

Trust is the cornerstone of humanity’s relationship with artificial intelligence. Like any type of trust, trust in AI takes time to build, seconds to break, and forever to repair once it is broken!

1 See “Impact of Artificial Intelligence, Robotics, and Automation on Higher Education” and “Impact of Artificial Intelligence, Robotics, and Machine Learning on Sales and Marketing.”

2 See “Applied Artificial Intelligence and Trust — The Case of Autonomous Vehicles and Medical Assistance Devices” and “Initial Trust Formation in New Organizational Relationships.”

3 See “E-Commerce: The Role of Familiarity and Trust” and “Building Customer Trust in Mobile Commerce.”

4 See “Why Do We Trust New Technology? A Study of Initial Trust Formation with Organizational Information Systems” and “A Qualitative Investigation on Consumer Trust in Mobile Commerce.”

5 See “E-Commerce: The Role of Familiarity and Trust” and “Initial Trust Formation in New Organizational Relationships.”

6 AGI or “strong AI” refers to AI technology that can perform almost all, if not all, of the intellectual tasks that a human being can.